Artificial Intelligence

In Tooth and Claw, Season 2 Episode 2 of Doctor Who 2.0, we see the formation of The Torchwood Institute and the banishing of The Doctor (and Rose) from the United Kingdom. Fat lot that does. Anyway, we also see Queen Victoria mention the multiple attempts to assassinate her. I suppose it is understandable that some eight or nine (nine if you count the werewolf) attempts were made on her life. She was a woman in charge of men in the most patriarchal culture ever (the White West generally, not just the UK). They also said "Lock her up!" all the time, and there was a never-ending…

Not yet.

As you know, JK Rowling is the author of the famous Harry Potter series of books (Harry Potter and the Sorcerer's Stone, etc.), and more recently, of a series of really excellent crime novels (if you've not read them, you need to: The Cuckoo's Calling, The Silkworm, and Career of Evil, with a fourth book on the way, I hear).

Intuit's Max Deutsch fed the seven Harry Potter books to a computer and told it to write a new chapter. It did. It came out as gibberish.

However, it isn't just random gibberish. When you read the new text, you can see that the dialog for each character is…
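The post doesn't describe the software Deutsch used. As a much simpler stand-in (not his method), a character-level Markov chain shows how a program trained on a text can write output that is nonsense overall yet locally mimics the source's spelling, names, and punctuation. The toy corpus and the function names `build_model` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def build_model(text, order=3):
    """Map each `order`-character context to every character seen after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        context = text[i:i + order]
        model[context].append(text[i + order])
    return model


def generate(model, seed, length=200, rng=None):
    """Extend `seed` one character at a time by sampling observed successors.

    The context length is taken from the seed, so it must match `order`."""
    rng = rng or random.Random()
    order = len(seed)
    out = seed
    for _ in range(length):
        successors = model.get(out[-order:])
        if not successors:  # context never followed by anything in training text
            break
        out += rng.choice(successors)
    return out


if __name__ == "__main__":
    corpus = ("Harry looked at Ron. Ron looked at Hermione. "
              "Hermione looked at Harry and sighed.")
    model = build_model(corpus, order=3)
    print(generate(model, seed="Har", length=80, rng=random.Random(0)))
```

Every four-character window of the output occurs somewhere in the training text, which is why the result looks "Potter-ish" at close range while making no sense at the level of sentences.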

The idea of “seeing the world through the eyes of a child” takes on new meaning when the observer is a computer. Institute scientists in the Lab for Vision and Robotics Research took their computer right back to babyhood and used it to ask how infants first learn to identify objects in their visual field.

How do you create an algorithm that imitates the earliest learning processes? What do you assume is already hard-wired into the newborn brain, as opposed to the new information it picks up by repeated observation? And finally, how do you get a computer to make that leap from a data-crunching…

How to Create a Mind: The Secret of Human Thought Revealed is Ray Kurzweil's latest book. You may know him as the author of The Singularity Is Near: When Humans Transcend Biology. Kurzweil is a "futurist" with a reputation as either one of the greatest thinkers of our age or one of its greatest hucksters, depending on whom you ask. In his new book...

Kurzweil presents a provocative exploration of the most important project in human-machine civilization—reverse engineering the brain to understand precisely how it works and using that knowledge to create even…

Last week, I wrote a piece for Motherboard about an android version of the science fiction writer Philip K. Dick. The story of the android is truly surreal, stranger than even Dick's flipped-out fiction, and I recommend you pop over to Motherboard and mainline it for yourselves. For the piece, I interviewed the lead programmer on the first version of the PKD Android, Dr. Andrew Olney. Aside from bringing science fiction legends back from the dead, Olney is an Assistant Professor in the Department of Psychology at the University of Memphis and Associate Director of the same university's…

Stimulating the brain with high-frequency electrical noise can improve perceptual learning more than transcranial direct current stimulation, whether anodal or cathodal (and more than sham stimulation), as newly reported by Fertonani, Pirulli & Miniussi in the Journal of Neuroscience. The authors suggest that transcranial random noise stimulation may work by preventing the neurophysiological homeostatic mechanisms that govern ion channel conductance from rebalancing the changes induced by prolonged practice on this perceptual learning…

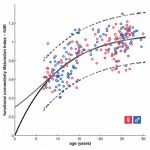

In last week's Science, Dosenbach et al describe a set of sophisticated machine learning techniques they've used to predict age from the way that hemodynamics correlate both within and across various functional networks in the brain. As described over at the BungeLab Blog, and at Neuroskeptic, the classification is amazingly accurate, generalizes easily to two independent data sets with different acquisition parameters, and has some real potential for future use in the diagnosis of developmental disorders - made all the easier since the underlying resting-state functional connectivity data…
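The excerpt doesn't spell out the classifier. As a rough sketch of the general idea, assuming each subject's data is a list of per-region BOLD time series, the code below turns region-to-region correlations into a feature vector and predicts age with k-nearest-neighbour regression. k-NN is a deliberately simple stand-in chosen for brevity, not the paper's method, and `synthetic_subject` is a hypothetical toy in which inter-regional coupling simply grows with age.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def connectivity_features(timeseries):
    """Upper triangle of the region-by-region correlation matrix, flattened.

    `timeseries` holds one list of signal values per region (one subject)."""
    r = len(timeseries)
    return [pearson(timeseries[i], timeseries[j])
            for i in range(r) for j in range(i + 1, r)]


def knn_predict_age(train_features, train_ages, query, k=3):
    """Predict age as the mean age of the k nearest training subjects."""
    dists = sorted((math.dist(f, query), age)
                   for f, age in zip(train_features, train_ages))
    nearest = dists[:k]
    return sum(age for _, age in nearest) / len(nearest)


def synthetic_subject(age, rng, n_regions=4, n_timepoints=200):
    """Hypothetical toy data: a shared signal whose weight grows with age."""
    shared = [rng.gauss(0, 1) for _ in range(n_timepoints)]
    w = age / 20.0
    return [[w * s + rng.gauss(0, 1) for s in shared] for _ in range(n_regions)]


if __name__ == "__main__":
    rng = random.Random(42)
    ages = [7, 9, 12, 15, 18, 21, 25, 30]
    train = [connectivity_features(synthetic_subject(a, rng)) for a in ages]
    query = connectivity_features(synthetic_subject(20, rng))
    print(round(knn_predict_age(train, ages, query, k=3), 1))
```

With R regions the feature vector has R(R-1)/2 entries, one per region pair; in real data that number grows quickly, which is one reason published work relies on more robust multivariate methods than this toy.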

Two seemingly contradictory trends characterize brain development during childhood and adolescence:

Diffuse to focal: a shift from relatively diffuse recruitment of neural regions to more focal and specific patterns of activity, whether in terms of the number of regions recruited, or the magnitude or spatial extent of that recruitment.

Local to distributed: a shift in the way this activity correlates across the brain, from being more locally arranged to showing more long-distance correlations.
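One way to make the "local to distributed" trend measurable, sketched here under stated assumptions: given each region's coordinates and a region-by-region correlation matrix, count the strong connections and ask what fraction span long distances. The correlation threshold and distance cutoff are illustrative assumptions, and the two toy matrices are invented to show the direction of the trend, not real data.

```python
import math


def long_range_fraction(coords, corr, r_threshold=0.3, distance_cutoff=50.0):
    """Fraction of strong connections (|r| >= r_threshold) whose endpoints are
    more than `distance_cutoff` apart (e.g. mm between region centres).

    Both thresholds are assumptions for illustration only."""
    n = len(coords)
    strong = long_range = 0
    for i in range(n):
        for j in range(i + 1, n):
            if abs(corr[i][j]) >= r_threshold:
                strong += 1
                if math.dist(coords[i], coords[j]) > distance_cutoff:
                    long_range += 1
    return long_range / strong if strong else 0.0


if __name__ == "__main__":
    coords = [(0, 0, 0), (10, 0, 0), (100, 0, 0)]  # toy region centres (mm)
    younger = [[1.0, 0.6, 0.1], [0.6, 1.0, 0.1], [0.1, 0.1, 1.0]]
    older = [[1.0, 0.2, 0.5], [0.2, 1.0, 0.5], [0.5, 0.5, 1.0]]
    print(long_range_fraction(coords, younger))  # -> 0.0
    print(long_range_fraction(coords, older))    # -> 1.0
```

In the "younger" toy matrix the only strong correlation links two nearby regions; in the "older" one the strong correlations link distant regions, so the fraction moves from 0.0 to 1.0.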

In this post I will describe some of the most definitive evidence for each of these developmental…

"What we're seeking is not just one algorithm or one cool new trick - we're seeking a platform technology. In other words, we're not seeking the entirety of a collection of point solutions, what we're seeking is a platform technology on which we can build a wide variety of solutions."

Dharmendra Modha, manager of cognitive computing at IBM Research Almaden, discusses the Systems of Neuromorphic Adaptive Plastic Scalable Electronics ("SyNAPSE") project. Mad scientist eyes are also on display:

Hat tip to Dave Jilk for pointing this out. See also his eCortex work.

Recent work has leveraged increasingly sophisticated computational models of neural processing as a way of predicting the BOLD response on a trial-by-trial basis. The core idea behind much of this work is that reinforcement learning is a good model for the way the brain learns about its environment; the specific idea is that expectations are compared with outcomes so that a "prediction error" can be calculated and minimized through reshaping expectations and behavior. This simple idea leads to exceedingly powerful insights into the way the brain works, with numerous applications to…
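The prediction-error idea can be written as a one-line update. The sketch below is a generic delta rule (Rescorla-Wagner style), not the model from any particular study; the learning rate `alpha` and the reward sequence are assumptions. Its trial-by-trial `errors` are the kind of quantity model-based fMRI analyses use as regressors for the BOLD signal.

```python
def learn_values(outcomes, alpha=0.1, v0=0.0):
    """Delta-rule value learning over a sequence of trial outcomes.

    Returns (values, errors): values[t] is the expectation held before
    outcome t, and errors[t] is the prediction error on that trial."""
    v = v0
    values, errors = [], []
    for r in outcomes:
        delta = r - v        # prediction error: outcome minus expectation
        values.append(v)
        errors.append(delta)
        v += alpha * delta   # shift the expectation toward the outcome
    return values, errors


if __name__ == "__main__":
    _, errs = learn_values([1, 1, 1, 1], alpha=0.5)
    print(errs)  # -> [1.0, 0.5, 0.25, 0.125]
```

With a constant reward the prediction error shrinks on every trial as the expectation catches up, which is the "minimized through reshaping expectations" part of the idea.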

How can we enhance perception, learning, memory, and cognitive control? Any answer to this question will require a better understanding of the process through which these abilities are most powerfully enhanced: cognitive change in early development.

But we can't stop there. We also want to know more about the neural substrates that enable and reflect these cognitive transformations across development. Some information is provided by developmental neuroimaging, but even that's not enough, because the real question we have can only be answered via mechanisms ("how"/"why") - quite different from the "what," "where," and "…

What if we got the organization of prefrontal cortex all wrong - maybe even backwards? That seems to be a conclusion one might draw from a 2010 NeuroImage paper by Yoshida, Funakoshi, & Ishii. But that would be the wrong conclusion: thanks to an ingenious mistake, Yoshida et al have apparently managed to "reverse" the functional organization of prefrontal cortex.

First things first: the task performed by subjects was very tricky. Yoshida et al asked subjects to sort three stimuli, which were presented simultaneously. The three stimuli differ from one another in three ways: number of…

Hierarchical views of prefrontal organization posit that some information processing principle, and not just task difficulty, determines which areas of prefrontal cortex will be recruited in a given task. Virtually all information processing accounts of the prefrontal hierarchy agree on this point, though they differ in whether the operative principle is thought to be the temporal duration over which information must be maintained, the relational complexity of that information, the number of conditionalities necessary to consider in behaving on that information, or the inherent…

If you can't make it to the Tishman Auditorium in New York tonight to catch the highly anticipated program The Limits of Understanding, we've got you covered. The event will be streaming live, but we'll also be there to cover it, so follow along with the commentary alongside the video stream, and feel free to join in! The event starts at 8:00 EST. Moderator Sir Paul Nurse, a Nobel Laureate and President of Rockefeller University, will join mathematician Gregory Chaitin, philosopher Rebecca Newberger Goldstein, astrophysicist Mario Livio, and A.I. pioneer Marvin Minsky in a discussion about…

Artificial Intelligence as a term implies that there is a "natural" intelligence we wish to replicate in the lab and then engineer in any one of several practical contexts. There is nothing in the term that implies that "intelligence" be human, but the implication is clear that such a thing as "intelligence" exists and that we have some clue as to what it is.

But it might not, and we don't.

I've been busy writing up a new paper, and expect the reviews back on another soon, so ... sorry for the lack of posts. But this should be of interest:

The Dana Foundation has just posted an interview with Terrence Sejnowski about his recent Science paper, "Foundations for a New Science of Learning" (with coauthors Meltzoff, Kuhl & Movellan). Sejnowski is a legendary figure in computational neuroscience, having founded the journal Neural Computation, developed infomax (the primary algorithm for independent component analysis) and contrastive Hebbian learning, and played an early…

Most computational models of working memory do not explicitly specify the role of the parietal cortex, despite an increasing number of observations that the parietal cortex is particularly important for working memory. A new paper in PNAS by Edin et al remedies this state of affairs by developing a spiking neural network model that accounts for a number of behavioral and physiological phenomena related to working memory.

First, the model: Edin et al simulate the intraparietal sulcus (IPS; with ~1000 excitatory units and 256 inhibitory units) and the dorsolateral prefrontal cortex (dlPFC;…
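Spiking network models like this one are built from simplified model neurons. As a hedged illustration of that building block only, the code below Euler-integrates a single leaky integrate-and-fire neuron; the parameter values are generic textbook-style choices, not those of Edin et al., whose neuron model isn't described in this excerpt.

```python
def simulate_lif(input_current, dt=0.1, tau_m=20.0, v_rest=-70.0,
                 v_thresh=-50.0, v_reset=-65.0, r_m=10.0):
    """Euler-integrate a leaky integrate-and-fire neuron.

    input_current: injected current (nA), one value per time step of `dt` ms.
    r_m is membrane resistance in MOhm, so r_m * I is in mV.
    Returns the spike times in ms."""
    v = v_rest
    spikes = []
    for step, i_ext in enumerate(input_current):
        # tau_m * dV/dt = -(V - V_rest) + R_m * I
        v += dt / tau_m * (-(v - v_rest) + r_m * i_ext)
        if v >= v_thresh:
            spikes.append(step * dt)
            v = v_reset  # fire and reset
    return spikes


if __name__ == "__main__":
    one_second = 10000  # 10000 steps of 0.1 ms
    for current in (1.5, 2.5, 3.0):
        print(current, len(simulate_lif([current] * one_second)))
```

Below about 2 nA (with these assumed values) the membrane settles under threshold and never fires; above it, firing rate rises with input, which is the basic input-output behaviour a network of such units inherits.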

A principal insight from computational neuroscience for studies of higher-level cognition is rooted in the recurrent network architecture. Recurrent networks, very simply, are those composed of neurons whose connections loop back onto themselves, enabling them to learn to maintain, over time, information that may be important for behavior. Elaborations to this basic framework have incorporated mechanisms for flexibly gating information into and out of these recurrently connected neurons. Such architectures appear to approximate well the function of prefrontal and basal ganglia circuits, which appear…
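A toy version of the recurrence-plus-gating idea: one unit whose self-connection carries its state forward, and an input gate that decides when new information overwrites it. This is a simplified illustration with the gate signals supplied by hand rather than learned, not an implementation of any specific prefrontal/basal ganglia model; `gated_memory` and `w_self` are hypothetical names.

```python
def gated_memory(inputs, gates, w_self=1.0):
    """Run one recurrently connected unit with an input gate.

    Gate open (1): the state is overwritten by the current input.
    Gate closed (0): the self-connection (weight w_self) carries the old
    state forward, so it is maintained despite distracting inputs."""
    state = 0.0
    trace = []
    for x, g in zip(inputs, gates):
        state = g * x + (1 - g) * w_self * state
        trace.append(state)
    return trace


if __name__ == "__main__":
    # Load 5, ignore two distractors (9, 9), load 2, ignore 7.
    print(gated_memory([5, 9, 9, 2, 7], [1, 0, 0, 1, 0]))
    # -> [5.0, 5.0, 5.0, 2.0, 2.0]
```

Setting `w_self` below 1 makes the stored value decay when the gate is closed, a crude way to see why maintenance depends on strong recurrent connections.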

Reductionism in the neurosciences has been incredibly productive, but it has been difficult to reconstruct how high-level behaviors emerge from the myriad biological mechanisms discovered with such reductionistic methods. This is most clearly true of the motor system, which (at its least reductionistic) has long been studied in terms of the programming of motor actions. However, as pointed out by Mars et al., the brain is almost constantly enacting motor plans, so the initiation of an action is more likely to be preceded by motor reprogramming than by anything else. Yet that process has…

There's little evidence that "staging" the training of neural networks on language-like input - feeding them part of the problem space initially, and scaling that up as they learn - confers any consistent benefit in terms of their long term learning (as reviewed yesterday).
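Mechanically, "staging" just means growing the training pool over time. The sketch below ranks examples by a user-supplied difficulty score (here, sentence length in words, an assumption) and yields progressively larger subsets; the training loop itself is omitted, and `staged_curriculum` is a hypothetical name. It illustrates the manipulation only and makes no claim that it helps, consistent with the review above.

```python
def staged_curriculum(examples, difficulty, n_stages=3):
    """Yield the training pool for each stage of a 'starting small' regime.

    Stage k holds the easiest k / n_stages fraction of the examples
    (ranked by `difficulty`), so the final stage is the full data set."""
    ranked = sorted(examples, key=difficulty)
    for k in range(1, n_stages + 1):
        cutoff = round(len(ranked) * k / n_stages)
        yield ranked[:cutoff]


if __name__ == "__main__":
    sentences = ["the dog that the cat chased ran", "dogs run",
                 "the cat sleeps", "boys who see girls run"]
    n_words = lambda s: len(s.split())
    for stage, pool in enumerate(staged_curriculum(sentences, n_words, n_stages=2), start=1):
        print(stage, pool)  # a real setup would train the network on `pool` here
```

The alternative the replication studies compared against is simply training on the full, unranked pool from the start.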

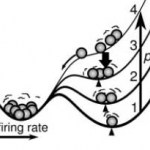

To summarize that post, early computational demonstrations of the importance of starting small were subsequently cast into doubt by numerous replication failures, with one exception: the importance of starting small is replicable when the training data lack temporal correlations. This leads to slow learning of the…