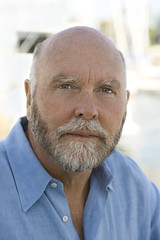

Four scientists - including the omnipresent J. Craig Venter (left) - have penned an opinion piece in the latest issue of Nature based on results from five individuals genotyped by two separate personal genomics companies. The article highlights some deficiencies in the way that genetic data are currently used by direct-to-consumer companies to generate risk predictions and to present them to customers.


The identity of the tested individuals isn't made explicit in the article, except to note that there were two males and two females from the same family and one unrelated female. All of the individuals were tested by the companies 23andMe and Navigenics, which examine ~580,000 and ~923,000 sites of common genetic variation (SNPs), respectively. It's worth noting that in both cases the scans were performed before the companies were required to comply with CLIA standards (meaning that genotyping accuracy may have improved somewhat since these scans were done).

The first result is reassuring: the concordance between the genotype calls from the companies was excellent, with disagreements at fewer than one in every 3,000 sites. Previous comparisons (see comments on this article) between 23andMe and deCODEme have found even smaller discrepancy rates, closer to one error in every 25,000 sites - the difference appears to be due to a substantially higher error rate on the Navigenics platform compared to 23andMe (relative to research-quality genotyping performed on the same samples, Navigenics had a 0.29% discordance rate versus 0.01% for 23andMe). Overall, though, it's clear that the levels of technical accuracy being achieved by the genotyping platforms used by major personal genomics companies are perfectly acceptable.
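The percentage discordance rates above are easier to compare when expressed as "one error in every N sites" and as an expected count per scan. The short Python sketch below does that arithmetic with the figures quoted in this post. It assumes, unrealistically as several commenters point out, that errors fall uniformly across every site on the chip, and the function names are purely illustrative.

```python
# Back-of-envelope arithmetic on the discordance rates quoted above.
# Assumption: errors are spread uniformly across every site on a chip.


def one_in_n(rate: float) -> int:
    """Express an error rate as 'one error in every N sites' (rounded)."""
    return round(1 / rate)


def expected_discordant(rate: float, n_sites: int) -> float:
    """Expected number of discordant calls if errors hit sites at random."""
    return rate * n_sites


# name: (discordance vs research-quality typing, approx. number of SNPs examined)
PLATFORMS = {
    "23andMe": (0.0001, 580_000),
    "Navigenics": (0.0029, 923_000),
}

for name, (rate, n_sites) in PLATFORMS.items():
    print(
        f"{name}: about 1 in {one_in_n(rate):,} sites, "
        f"roughly {expected_discordant(rate, n_sites):,.0f} discordant calls per scan"
    )
```

Under that uniform-error assumption, the 0.01% and 0.29% figures work out to roughly one error per 10,000 sites and one per 345 sites, respectively.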

The real challenge is not with generating the raw genetic data, but rather with converting it into disease risk predictions - and here, the authors argue, the results of the comparison are less than ideal:

[We] found that only two-thirds of relative risk predictions qualitatively agree between 23andMe and Navigenics when averaged across our five individuals ... For four diseases, the predictions between the two companies completely agree for all individuals. In contrast, for seven diseases, 50% or less of the predictions agree between the two companies across the individuals.
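To make "qualitatively agree" concrete, here is a minimal Python sketch. It assumes that each prediction is reduced to its direction (relative risk above, below or equal to 1.0) and that two companies agree when those directions match; the article's own definition may differ. The diseases and relative-risk values are invented for illustration and are not taken from the study.

```python
# Hypothetical illustration of per-disease and overall qualitative agreement.
# Assumption: a prediction's qualitative call is just its direction relative
# to average risk (relative risk of 1.0).


def qualitative_call(relative_risk: float) -> str:
    """Collapse a relative risk into a qualitative direction."""
    if relative_risk > 1.0:
        return "elevated"
    if relative_risk < 1.0:
        return "reduced"
    return "average"


def agreement_by_disease(company_a: dict, company_b: dict) -> dict:
    """Fraction of individuals for whom both companies make the same call, per disease."""
    result = {}
    for disease, risks_a in company_a.items():
        risks_b = company_b[disease]
        matches = sum(
            qualitative_call(a) == qualitative_call(b)
            for a, b in zip(risks_a, risks_b)
        )
        result[disease] = matches / len(risks_a)
    return result


def overall_agreement(company_a: dict, company_b: dict) -> float:
    """Fraction of all (individual, disease) predictions that agree."""
    matches = total = 0
    for disease, risks_a in company_a.items():
        for a, b in zip(risks_a, company_b[disease]):
            matches += qualitative_call(a) == qualitative_call(b)
            total += 1
    return matches / total


# Invented relative risks for five individuals and two made-up diseases.
company_a = {
    "disease_X": [1.2, 0.8, 1.1, 0.9, 1.3],
    "disease_Y": [1.5, 1.4, 0.7, 1.2, 0.9],
}
company_b = {
    "disease_X": [1.1, 0.9, 1.05, 0.95, 1.4],
    "disease_Y": [0.8, 1.1, 1.2, 0.9, 0.95],
}

print(agreement_by_disease(company_a, company_b))  # {'disease_X': 1.0, 'disease_Y': 0.4}
print(overall_agreement(company_a, company_b))     # 0.7
```

Note that agreement on direction says nothing about agreement on magnitude: two companies reporting relative risks of 1.05 and 1.5 would count as agreeing here.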

The authors note that the discrepancies are primarily due to different criteria used by the companies to select risk markers. Coming up with robust and universal criteria for marker inclusion is something that was discussed in a meeting of the three major personal genomics companies back in July 2008. In a Bloomberg article today, 23andMe's Andro Hsu notes that the companies had "a pretty difficult time agreeing" on the criteria, and that uniform standards are "a great ideal, but difficult to implement in practice".

It's worth noting that discrepancies between the predictions made by the companies don't necessarily mean that they are doing something wrong - predicting disease risk from genetic variants is still a new and uncertain field, and there is still plenty of room for valid disagreement on the best approach to use. However, I do agree that there are several areas where the companies could do substantially better, especially in terms of reporting the fraction of risk variance captured by their markers (more on this below).

Venter and his co-authors make a number of recommendations targeted at personal genomics companies and the broader genetics community to improve risk predictions. I've listed these below, along with my comments:

Companies should report the genetic contribution for the markers tested: personal genomics companies generally do a good job of explaining what proportion of disease risk is due to genetic vs environmental factors, but they typically don't spell out what proportion of the genetic disease risk is explained by the markers they test for. I agree with the authors that companies need to do a much better job of making this clear to customers; however, it's also worth acknowledging that this calculation is often non-trivial given the information currently provided in the published literature. This is as much an issue for the broader genetics community as for personal genomics companies.
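To illustrate why this calculation is non-trivial, here is one common back-of-envelope approach, sketched in Python. Each marker is treated as additive on the log-odds scale, so it contributes 2p(1 - p)(ln OR)^2 of variance. The residual variance of a logistic model is taken as pi^2/3, and the resulting variance explained is divided by an assumed heritability. This is an approximation chosen for illustration, not necessarily what any company or the Nature authors use, and the allele frequencies, odds ratios and heritability in the example are made up.

```python
import math


def snp_variance_logit(freq: float, odds_ratio: float) -> float:
    """Variance on the log-odds scale from one biallelic SNP with
    per-allele (additive) effect ln(OR) and risk-allele frequency freq."""
    beta = math.log(odds_ratio)
    return 2 * freq * (1 - freq) * beta ** 2


def fraction_of_heritability_explained(snps, heritability: float) -> float:
    """Approximate share of heritability captured by a panel of markers.

    snps: iterable of (risk_allele_frequency, per_allele_odds_ratio) pairs.
    Assumes independent markers and a logistic model whose residual
    variance is pi^2 / 3.
    """
    explained = sum(snp_variance_logit(p, odds) for p, odds in snps)
    liability_r2 = explained / (explained + math.pi ** 2 / 3)
    return liability_r2 / heritability


# Made-up panel of three common, modest-effect markers and an assumed h^2 of 0.4.
panel = [(0.3, 1.2), (0.5, 1.1), (0.1, 1.4)]
share = fraction_of_heritability_explained(panel, heritability=0.4)
print(f"markers explain roughly {share:.1%} of the assumed heritability")
```

With these invented numbers the three markers capture only about 3% of the assumed heritability. Companies would also need reliable heritability estimates and effect sizes for this calculation, and those are exactly the inputs the published literature often fails to provide.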

Companies should focus on high-risk predictions: the authors argue that companies should "structure their communications with users around diseases and traits that have high-risk predictions"; basically, that they should focus heavily on the relatively small number of diseases for which an individual is at substantially above-average risk. This makes sense, so long as it doesn't come at the cost of reducing the availability of information to customers who actually do want to know everything.

Companies should directly genotype risk markers: it is common practice for companies to use nearby, tightly linked markers to "impute" the genotype for a risk marker not present on their chip. The authors note that while this works well on a population level, recombination can result in the proxy marker making incorrect predictions in a minority of individuals.

I actually don't see this as a major issue: the published risk marker is almost never the actual causal variant, so no matter what you genotype it's likely that a non-trivial proportion of "at risk" individuals don't actually carry the true underlying risk variant; in fact, purely by chance, in many cases the marker chosen by the company may actually be a better proxy for risk than the published marker.

Obviously once we have a catalogue of true causal variants we should ensure that those variants are present on genotyping chips; but until that occurs I don't have a major problem with companies using tightly linked proxies for risk prediction, so long as they are clearly marked as such.
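The quality of a proxy can be quantified with the standard linkage disequilibrium measure r^2, computed from haplotype frequencies as D = p_AB - p_A * p_B and r^2 = D^2 / (p_A(1 - p_A) p_B(1 - p_B)). The Python sketch below is purely illustrative. The haplotype frequencies are invented, and the sketch does not describe how any particular company actually picks or uses its proxies.

```python
def linkage_r_squared(p_ab: float, p_a: float, p_b: float) -> float:
    """r^2 between two biallelic markers.

    p_ab: frequency of haplotypes carrying allele A at the published marker
          and allele B at the proxy marker
    p_a, p_b: population frequencies of alleles A and B
    """
    d = p_ab - p_a * p_b  # linkage disequilibrium coefficient D
    return d * d / (p_a * (1 - p_a) * p_b * (1 - p_b))


# Invented examples: a perfect proxy, a strong-but-imperfect proxy, and an unlinked marker.
perfect = linkage_r_squared(p_ab=0.3, p_a=0.3, p_b=0.3)
strong = linkage_r_squared(p_ab=0.27, p_a=0.3, p_b=0.3)
unlinked = linkage_r_squared(p_ab=0.09, p_a=0.3, p_b=0.3)
print(round(perfect, 3), round(strong, 3), round(unlinked, 3))
```

These examples give r^2 values of about 1.0, 0.73 and 0. In a case like the second one, the proxy and the published marker disagree on some chromosomes, which is the minority-of-individuals problem the authors raise. A proxy's r^2 with the published marker is also only part of the story, since the published marker is itself usually a proxy for the causal variant.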

Companies should test pharmacogenomic markers: the authors argue that genetic variants predicting response to drugs will prove particularly useful. I agree, and so do personal genomics companies - given their utility and their interest for customers I have little doubt that these variants will generally be added to the companies' chips as soon as they become available.

Companies should agree on strong-effect markers: several of the largest discrepancies found in this analysis were due to markers used by one company with relatively large predicted effects on risk that fell outside the criteria for inclusion for the other company. The authors suggest that companies need to agree on a core set of large-effect markers; that may prove challenging, but it would certainly be worth considering applying more stringent filters to markers with larger reported effect sizes in order to weed out markers with disproportionate effects on risk prediction.

Finally, the authors make several recommendations to the genetics community. Two of these are very important: there needs to be a strong research focus on examining whether receiving genetic risk information actually changes behavioural outcomes, and on performing large prospective studies (that is, studies in which large numbers of people are genotyped and then followed to see if they develop common diseases) to validate risk prediction algorithms. Regarding the latter approach, the US government would do well to heed David Dooling's recent advice on the need for health care reform to enable such projects to move forward.

The other two recommendations are actually both things that geneticists are already starting to do quite enthusiastically: replicating risk variants in other populations, and using sequencing-based rather than genotyping-based approaches.

In summary: it will be very interesting to see whether this high-profile publication provokes personal genomics companies into tightening up some aspects of their reporting. However, in my view the best thing about this article is that it demonstrates scientists actually engaging constructively with the personal genomics industry rather than jeering derisively from the sidelines (something I've seen all too frequently in recent conference presentations). This sort of engagement is critical if the genetics community wants to influence the way that personalised medicine evolves over the next few years.

Subscribe to Genetic Future.


Hi Daniel, Awesome review of the paper. Thanks. You mentioned that CLIA standards compliance would improve genotyping accuracy, but accuracy already seemed to be good news in the study (both companies were accurate). Would CLIA compliance have any impact on improving disease risk prediction from the genotype data?

Hi Mark,

CLIA compliance may have had a positive effect on genotyping accuracy, but I haven't seen anything to suggest that these companies had any worse accuracy prior to meeting the CLIA standards.

And no, as far as I know CLIA compliance would have no effect whatsoever on risk prediction algorithms; it purely relates to quality control of the genotyping procedure itself.

Hi Mark,

thanks for the review! This is indeed a great demonstration of how scientists can constructively engage in DTC genomics. I agree that we need to build prospective studies to evaluate the long-term benefits of having privileged risk information. But there is no need to limit that to genomic information; we are in a good position to make personalized risk profiles based on clinical measures. Has that had a great impact? NO. How long before we reach public health significance...?

Thanks Daniel.

Amusing that Craig Venter - he who sparked the race for preventative patenting of the human genome by the publicly-funded genome consortium - should be calling for DTC testing of the "real" risk markers!

With regards:

The first few studies are in:

http://content.nejm.org/cgi/content/abstract/361/3/245

Disclosure of APOE genotype for risk of Alzheimer's disease.

Green RC, Roberts JS, Cupples LA, Relkin NR, Whitehouse PJ, Brown T, Eckert SL, Butson M, Sadovnick AD, Quaid KA, Chen C, Cook-Deegan R, Farrer LA; REVEAL Study Group.

N Engl J Med. 2009 Jul 16;361(3):245-54. PMID: 19605829

And companion opinion piece:

http://content.nejm.org/cgi/content/full/361/3/298

Effect of genetic testing for risk of Alzheimer's disease.

Kane RA, Kane RL.

N Engl J Med. 2009 Jul 16;361(3):298-9. PMID: 19605835

Just for completeness' sake, I should point out that 23andMe offers three well-validated pharmacogenomics reports: warfarin sensitivity, clopidogrel efficacy, and 5-fluorouracil sensitivity (with more to come). (Full disclosure: I work for 23andMe.)

Another important behavioral paper came out recently on the NIH Multiplex Initiative.

Characteristics of users of online personalized genomic risk assessments: implications for physician-patient interactions [PDF].

McBride CM, Alford SH, Reid RJ, Larson EB, Baxevanis AD, Brody LC.

Genet Med. 2009 Aug;11(8):582-7

I don't see how it's constructive to engage with such work at all. It's plainly obvious that we're nowhere close to understanding gene/organism/environment interactions as a whole (except for particular simply "broken" genes) as long as the phrase "genetic contribution" still exists.

How much does one transistor "contribute" to the function of a CPU? Put that in a simple proportion and you'll have a patent that will make you the richest person in the world -- right next to the guy who invents a perpetual motion machine.

Until then, this work mostly sits right next to 19th century snake-oil salesmanship -- hey, sometimes even those potions turned out to have an effect on something.

To the extent the science allows it, most of the recommendations are already being used by the freely downloadable Promethease program, which compares DNA data from any consumer platform against the SNPedia wiki of genetic variation.

And since SNPedia is a wiki, consensus among geneticists, genetic counselors and other scientists can be readily seen and applied for the benefit of everyone.

Is this really true? I'm not an expert so I'm not sure, but I find even limited utility hardly comparable to snake oil. Can you back your assertion with evidence?

In related news, TrelawneyDivination Incorporated has announced it is launching a DTC Personal Genomics platform to bolster its current lineup of classic Divination Technologies, including Tea Leaf and Astrological assays.

That many of the claims for DTC are overstated does not mean that all of them are wrong.

For example, some of the pharmacogenomics ambitions are beginning to be realised:

Well Known Pairs of Gene-Drug PGx Relationships

http://www.pharmgkb.org/resources/forScientificUsers/well_known_pairs_o…

If you look at the last example, on warfarin dosage, and follow the link you'll see why this information may be of some benefit in averting Adverse Drug Events, as it is used for:

i.e. lots of life-threatening illnesses, but:

So maybe a quick way of narrowing down a non-toxic dose would be useful?

Getting your entire genome sequenced will eventually be cheaper than giving birth at a hospital.

So all this rearguard stuff is moot.

The medical genomics revolution is here to stay.

While they should be freely available, I think tests for pharmacogenomic markers will for the most part be patented, and Pharma companies may or may not license third parties to do those tests on whoever wants them, and even if they do, the collection of license fees might be unaffordable. (The three drugs that 23andMe runs tests for are off patent.)

Two scenarios:

1. there's a genetic test to predict whether a drug will cause severe side effects. Your doctor (and the drug company) won't want to rely on a DTC test before prescribing it, they'll want to run their own test.

2. There's a genetic test to predict effectiveness of a drug without severe side effects. Question: would the drug company prefer that you try their drug for a few months before deciding it doesn't work and switching to a competitor's product, or that you just take a DTC test and skip buying their product altogether? Answer: Make the license fee for the test more expensive than several months worth of the drug, if you license the test to third parties at all.

Here's another fun scenario. Imagine you're a drug company that has an approvable drug finishing up Phase III. A competitor already has a drug on the market for the same condition. You have the option of spending $$$ to find a genetic marker for the rare but severe side effects to your drug, and then submitting that to the FDA as well. If you do, the FDA may well approve your drug with the proviso that everyone who is prescribed the drug must be tested first ... whereas your competitor's drug is under no such restrictions. Given the ethics shown by some companies when it comes to conducting and publishing their clinical trials and the marketing of their drugs, my guess is the test's introduction might well be delayed for a few years.

If the "personal" in "personal genomics" means individual rather than the opposite of societally-sponsored, we should also throw this story into the mix:

http://www.genomeweb.com/german-american-team-confirms-cyp2d6-influence…

which reports on:

http://jama.ama-assn.org/cgi/content/short/302/13/1429?home

Schroth, W. et al (2009)

Association Between CYP2D6 Polymorphisms and Outcomes Among Women With Early Stage Breast Cancer Treated With Tamoxifen

JAMA. 2009;302(13):1429-1436

with a tantalising link back to an earlier story from 2006:

http://www.genomeweb.com/dxpgx/fda-panel-leans-toward-including-cyp2d6-…

i.e. if the drug is expensive enough, and the consequences of the wrong treatment dramatic enough, then a genetic test becomes just another routine pre-treatment assessment.

Agreed, you would probably want this done in the sort of lab you could sue if they did it wrong, which rules out the DTC caveat emptor types ...

Technically, CLIA doesn't apply to 23andMe. It has no internal lab, and as such CMS has decided not to regulate it as a laboratory under CLIA. But LabCorp, which provides lab services to 23andMe, is CLIA compliant. The company began working with LabCorp last year. Either way, the validity of the algorithms has nothing to do with CLIA compliance.

It's important to put the reported accuracy in context. While on the face of it a rate of 1 in 3,000 (or 1 in 25,000) seems quite good, if one is making 1 million calls (I rounded up to make the math easier), there would be between 40 and over 300 incorrect calls. If these incorrect calls occurred in one of the Ashkenazi BRCA mutations or in CYP2C9/VKORC1 for warfarin dose (23andMe), the impact could be significant. Also, some alleles are much harder to genotype correctly than others, so the errors may not be evenly distributed. It suggests to me that for highly penetrant alleles, testing outside the chip might be preferable.
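The same arithmetic can be turned around to ask how likely it is that any of a small set of important sites is wrong. Assuming each call is wrong independently with the same probability (a simplification, as the reply below points out), the chance that at least one of k particular sites is miscalled is 1 - (1 - e)^k. The Python sketch below uses the two rates quoted in this comment. The choice of 10 "clinically important" sites is a hypothetical example.

```python
def prob_any_miscall(error_rate: float, k_sites: int) -> float:
    """Chance that at least one of k sites is miscalled, assuming independent,
    identically distributed errors (a simplification)."""
    return 1 - (1 - error_rate) ** k_sites


RATES = {"1 in 3,000": 1 / 3000, "1 in 25,000": 1 / 25000}
K_CRITICAL_SITES = 10  # hypothetical number of high-impact SNPs in a report

for label, rate in RATES.items():
    p = prob_any_miscall(rate, K_CRITICAL_SITES)
    print(f"{label}: P(at least one of {K_CRITICAL_SITES} sites wrong) = {p:.3%}")
```

At these chip-wide rates, the chance stays well under 1% for ten sites. Because errors are not spread evenly, though, what really matters is the accuracy at those specific loci.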

The other issue is that proficiency-testing results and error rates should be made publicly available for review. CLIA certification doesn't require publication of results, and it does not mandate proficiency testing (it allows 'alternative methods', which are not tightly defined).

@Marc

Context is very important with these accuracy numbers. You missed an important factor, however: the distribution of error. If you assume an even error rate across the entire genotyping chip, then yes, 40 errors out of a million could be significant if they landed in important regions like BRCA or CYP2C9.

But that's not the case. Errors are not random. Some probes are better than others. Most of the discordance lies in SNPs that aren't associated with anything, at the moment; probes that have not been scrutinized because they aren't reported on. For things like BRCA, companies like 23andMe take a lot of effort to ensure that their calls are of high accuracy and that people with ambiguity in their data are informed as much.

@Marc again

It was pointed out to me that you actually made my observation in your own post, so my apologies for missing that and erecting a straw man to burn.

The SNPs found in these scans are associative and have no proven bearing on disease outcomes. In other words, they are statistical associations garnered from people with certain conditions and certain SNPs. It is better to look at the results as a possible indicator of disease outcomes if bad lifestyle choices have been made. Personal choice is important. Telling someone who has brown eyes they have a high probability of having brown eyes is not much use. Knowing the disease and longevity outcomes of one's extended family is probably more useful than finding out you have SNPs which are associated with Type 2 Diabetes.

As a geneticist I don't look at these scans as snake oil. I think the more we know about ourselves, the SNPs included, the better. The only problem is the cost, and the lack of cheap genomic scans.