"There are some things we do much better than computers, but since most of chess is tactically based they do many things better than humans. And this imbalance remains. I no longer have any issues. It’s a bit like asking an astronomer, does he mind that a telescope does all the work?" -Vishy Anand

It used to be no contest. Even if a computer could perform millions, billions, or trillions of calculations per second, a game like chess surely got too complicated too quickly for a computer to compete with humans. At least, that's what we used to think, but some things just don't stay the same, as Willy Mason sings in his song:

Even after all the time that's passed, it's hard to believe that computers have caught up to human intuition. After all, the number of possible continuations for both sides in a game of chess is astonishing.

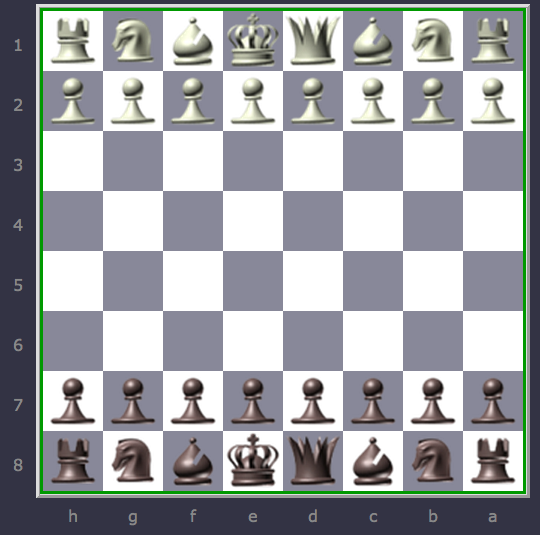

From a starting point on a chessboard, the white player has a choice of 20 different moves, to which black can respond with any of 20 moves of her own. For a computer to look even a few moves ahead, it must consider hundreds of millions of chess positions, a daunting task for any machine and its programmer.
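To put numbers on that growth, here is a small Python sketch. It hard-codes the well-known "perft" counts for the starting position (the number of legal move sequences at each depth, which engine developers use to test move generators) and computes how much the tree grows with each ply. Note that perft counts move sequences, so different move orders reaching the same position are counted separately; the number of distinct positions is somewhat smaller.

```python
# Perft counts for the standard starting position: the number of legal
# move sequences (leaf nodes of the game tree) after a given number of plies.
# One ply = one move by one side, so 6 plies = 3 moves each.
PERFT = {
    1: 20,
    2: 400,
    3: 8_902,
    4: 197_281,
    5: 4_865_609,
    6: 119_060_324,
}


def growth_per_ply(perft: dict[int, int]) -> dict[int, float]:
    """Ratio of the tree size at depth d to the tree size at depth d - 1."""
    depths = sorted(perft)
    return {d: perft[d] / perft[d - 1] for d in depths[1:]}


for depth, factor in growth_per_ply(PERFT).items():
    print(f"ply {depth}: {PERFT[depth]:>12,} sequences (x{factor:.1f})")
```

Three moves for each side already gives over a hundred million sequences, which is where the "hundreds of millions" figure comes from.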

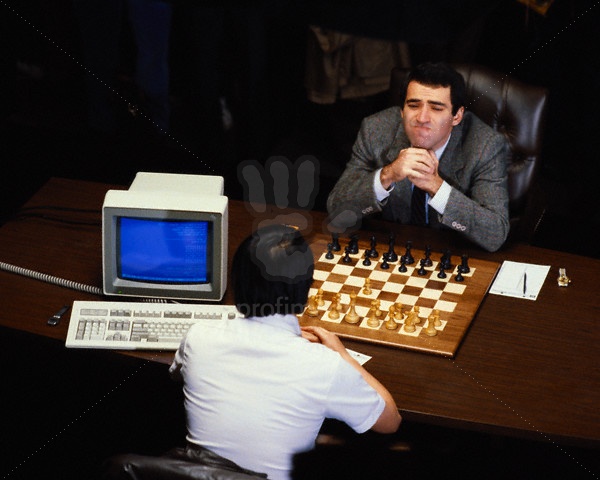

The first big human vs. computer match that I remember came in 1989, when then-World Chess Champion Garry Kasparov took on the best chess computer built up to that time. The computer, Deep Thought, came in as the reigning World Computer Chess Champion, and had once found a forced mate 37 moves ahead, a truly amazing feat for a game of chess!

While human improvement in chess has been incremental, computers have kept getting far faster, and the algorithms for evaluating chess positions have kept improving as well. The tipping point came eight years later.
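As a rough illustration of what "evaluating a position" means at its very simplest, here is a Python sketch that only counts material, using the conventional piece values (pawn 1, knight and bishop 3, rook 5, queen 9). Real engines, Deep Blue included, use far richer evaluation functions that also weigh things like king safety, pawn structure, and mobility; the choice of a FEN piece-placement string as input is simply an assumption for convenience.

```python
# Conventional material values (pawn = 1). Kings are left out because
# each side always has exactly one.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9}


def material_balance(placement: str) -> int:
    """Return white's material minus black's for a FEN piece-placement field.

    Uppercase letters are white pieces and lowercase letters are black;
    digits (runs of empty squares) and '/' (rank separators) are ignored.
    """
    score = 0
    for ch in placement:
        value = PIECE_VALUES.get(ch.lower())
        if value is None:
            continue  # king, digit, or rank separator
        score += value if ch.isupper() else -value
    return score


START = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"
print(material_balance(START))  # 0: the starting position is level
# Same position with black's queen removed: white is up 9.
print(material_balance("rnb1kbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"))  # 9
```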

In a six-game match in 1996, Deep Blue, the successor to Deep Thought, became the first computer ever to win a game against a reigning World Chess Champion under tournament conditions and time controls. It was the only game the computer won in that match, which Kasparov took 4-2.

But in the 1997 rematch, Deep Blue defeated Kasparov not only in a game, but in the entire match, by a score of 3½-2½. Computers have only gotten stronger since. No computer has lost a championship-caliber match against a human since then, and no human has won even a single game against a sufficiently strong computer under tournament conditions since 2005!

I'm not a strong chess player; I enjoy playing, but anyone even approaching expert or master level will beat me pretty much 99% of the time. Against me, you can make a few small mistakes or give away a little material and still win. But a top chess computer will crush you.

Disclaimer: The news story I link to below was apparently an April Fool's joke that I fell for. It seems I'm not the only one. (You'll notice the story was posted April 2nd, 2012 with no disclaimer.) I'm happy to leave this up as proof that even I get fooled sometimes, but you can safely ignore everything past this point.

Amazingly, this now extends as far back as move 3!

The three moves above are the King's Gambit opening, one of the oldest and most storied openings in all of chess. Black typically takes white's pawn, and then white develops either his knight or his bishop, aiming for a fast attack on black's king.

Amazingly, chess computers are now strong enough to have determined that, with optimal play, black can force a win 100% of the time based on this opening!

This setup of three computers, running software written by Vasik Rajlich, is used to control a cluster of about 300 cores, which can also tap into IBM's POWER7 cluster of 2,880 cores. (That's the same system IBM's Watson used when it played Jeopardy!)

Image credit: Vasik Rajlich / Lukas Cimiotti, via Chessbase. About 50 of the 300 cores on the Cimiotti cluster are shown here.

And after a deep, detailed analysis of all those massively parallel lines, covering every combination of moves, the verdict is that if black plays correctly at every stage, white will lose.

In other words, by choosing to play 2. f4 in just the second move of the game, white has made a mistake. In fact, only by playing the following weird bishop move -- 3. Be2 -- can white even have a chance at a draw in a mistake-free game by black; in all other lines, black can force victory.

That's right: chess computers are powerful enough that, given just those first few opening moves, they can determine whether the position is a forced win for one side. And surprisingly enough, even this early on, a tiny choice like playing the King's Gambit in the traditional fashion, the way it's been played for hundreds of years, means that white is lost. You know what the most interesting thing about this is, to me? The moves black must play to win, and "bust" white's opening, were correctly theorized over 50 years ago by Bobby Fischer, all the way back in 1961!

Pretty amazing when you think about it, and a tremendously powerful demonstration that it's just a matter of time before all of chess is completely solved. It will still be an interesting game for humans, but for computers it will very likely become just an advanced version of tic-tac-toe (or nuclear war), where neither side can do better than a draw unless the other makes a mistake.
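What "solving" a game means is easy to see on tic-tac-toe, which is small enough to search completely. The Python sketch below runs a plain minimax search over every reachable position and confirms the draw. It only illustrates the concept; real chess analysis relies on heavy pruning, evaluation functions, and endgame databases, and nothing here reflects how any particular chess project works.

```python
from functools import lru_cache
from typing import Optional

# The eight winning lines on a 3x3 board; squares are numbered 0-8
# left to right, top to bottom, and "." marks an empty square.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def solve(board: str, to_move: str) -> int:
    """Value of the position under perfect play: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0  # board full and nobody has a line: a draw
    nxt = "O" if to_move == "X" else "X"
    values = [solve(board[:i] + to_move + board[i + 1:], nxt)
              for i, square in enumerate(board) if square == "."]
    # X picks the child that is best for X; O picks the one best for O.
    return max(values) if to_move == "X" else min(values)


print(solve("." * 9, "X"))  # 0: with perfect play, tic-tac-toe is a draw
```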

It looks like you were fooled by an April Fool's joke in ChessBase News, which claimed that Rajlich had solved the King's Gambit. I was fooled too, at one point, but ChessBase News runs an April Fool's joke every year, and here is where they admitted this one was a prank:

http://www.chessbase.com/newsdetail.asp?newsid=8051

There are a lot of clues in their original article which make it clear that it's a prank... if one reads it carefully!

This blog post is apparently based on the ChessBase News article http://www.chessbase.com/newsdetail.asp?newsid=8047 (2 April 2012)

However, that was an April Fool's joke: http://www.chessbase.com/newsdetail.asp?newsid=8067

The King's Gambit has not yet been solved.

Sigh. Thanks for the info, everyone.

The news story I linked to was apparently an April Fool’s joke that I fell for. It seems I’m not the only one. (You’ll notice the story was posted April 2nd, 2012, with no disclaimer.) I’m happy to leave this up as proof that even I get fooled sometimes, and I'll put a message in the main text -- with links -- to reflect that.

April Fools' aside, what I find fascinating about the whole computers-versus-humans thing is not that computers can win most of the time, but how much processing power they need to do it. If you compare the energy, in joules, required by the computer and by the human brain (even ignoring how much of the brain's energy goes to things like breathing), the human brain wins hands down.

If we limited a computer to the amount of energy our brains use, I wonder whether it could even beat you.
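The comparison comes down to simple arithmetic: energy is power multiplied by time. In the sketch below, the 20-watt figure for the brain is the commonly quoted estimate, while the computer's 1,000-watt draw and the four-hour game length are purely hypothetical placeholders; substitute measured numbers for any real machine.

```python
BRAIN_WATTS = 20.0        # commonly cited estimate for the human brain
COMPUTER_WATTS = 1_000.0  # hypothetical placeholder for a chess machine


def energy_joules(watts: float, seconds: float) -> float:
    """Energy used = power (watts, i.e. joules per second) x time (seconds)."""
    return watts * seconds


game_seconds = 4 * 3600  # assume a four-hour tournament game
brain = energy_joules(BRAIN_WATTS, game_seconds)
machine = energy_joules(COMPUTER_WATTS, game_seconds)
print(f"brain:   {brain / 1e6:.2f} MJ")
print(f"machine: {machine / 1e6:.2f} MJ ({machine / brain:.0f}x the brain)")
```

Because both sides play for the same length of time, the ratio depends only on the two power figures.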

- February 10, 1996, first win by computer against top human

- November 21, 2005, last win by human against top computer

Had I not read this, I would have gone on without knowing it was an April Fool's joke! I read that article last year and thought it was real. After all, computers are not that frightening.

Let's be clear: computers are winning at chess, but they aren't thinking.

Starting with Deep Blue, it is amazing that any human ever wins.

To beat Kasparov, here is what Deep Blue had to do:

- have a team of programmers program in the rules of chess

- have a team of chess grandmasters tell the programmers grandmaster rules of thumb to program in

- load into the computer's database every move of every game Kasparov had ever played

- have the team of grandmasters analyze Kasparov's games for weaknesses

- have the grandmasters come up with rules of thumb for play specifically against Kasparov

- have the team of grandmasters develop specific strategies for play against Kasparov

Without all of this programming, which cost IBM a small fortune, the machine wouldn't have stood a chance against Kasparov. That any human can stand up against a computer program like this is, to me, the great story.

Kasparov, with his small team of grandmasters, prepared to play with perfect strategy, because you need perfection to play against a machine. He learned that he could never win by psyching out his opponent, nor by knowledge of past games. He could only win by outthinking and outlearning Deep Blue.

Because whatever Deep Blue, or any chess computer so far, is doing, it is not thinking.

So to me, the idea that any human being can stand up against the collective brain power of dozens of programmers, dozens of grandmasters, and databases of thousands of games (today, millions of games), and that one single human can think and learn enough to win even one game against such an opponent: THAT, TO ME, WAS THE AMAZING STORY AT THE TIME.

But of course, the press everywhere, and even this article, pretty much go along with the marketing spin that the machine has beaten the man.

Finally, consider Ethan's statement that:

"and no human has won even a game against a sufficiently strong computer under tournament conditions since 2005!"

Such a statement hides the continuing achievement of the world's best grandmasters.

Take the best chess computer/program for 2005 as an example.

How many years did it take for a grandmaster to learn to defeat that machine in tournament play?

And if you have to upgrade the computer and its software every year just to keep beating the world's best grandmasters...

Well then, what we have here is a really good teaching machine.

And all of the amazement, in my opinion, still goes to the grandmaster, who as an individual can learn to outthink, outlearn, and outplay the collective intelligence of teams of programmers and chess grandmasters and the databases of millions of chess games.

And that is the real story: few stories show so clearly how powerful a single human mind, such as Kasparov's, can be.

The best chess computers/programs/databases are rules-based machines, NOT LEARNING MACHINES.

Human intelligence is still the only intelligence on planet Earth. And nothing else compares (yet).

And of course, no computer/program/database can beat a Go master yet. The game of Go.

Now that's what I'm talking about.

"And all of the amazement, in my opinion, still goes to the grandmaster,"

- you are absolutely right about that. A computer is just a hunk of metal on its own (not counting AIs and that sort of thing).

What I find fascinating is that we were able to "teach" the computer how to play chess in the first place. In a relatively short amount of time (just a couple of decades) we created a bunch of wires and transistors that can play chess. I mean... that's just amazing when you think about it :D

"Not thinking"?

Let me tell you what my artificial intelligence professor told us was the (snarky) definition of artificial intelligence:

Artificial intelligence is anything we haven't taught a computer to do... yet.

The goal posts are always moving. "Computers can't think; if they could think they could do X, but they can't. Perhaps, someday, if they prove they can do X, we can say they're thinking." And then we teach them to do X, and then people pick a NEW X.

"A computer will never be able to beat a strong player at Chess" they said. Done. Oh, okay, then how about:

"A computer will never be able to beat a grandmaster at Chess" they said. Done. Oh, okay, then how about:

Drive a car? Done. (In rush-hour traffic and on Mars.) Win at Jeopardy? Done. Pick its own "interesting" scientific hypotheses and test them? Done. Write a song? Write a poem? Paint a picture? Done. Done. Done.

"A computer will never be able to beat a strong player at Go." Not... yet. But soon. There is now probably no intellectual feat at which a computer can't outperform a bright 13-year-old, which makes computers clearly more intelligent than every creature ever to find itself on the face of the Earth, except for adult humans. That includes every dolphin and every bird; if we'll say those animals are "thinking", why can't we admit that our computers are capable of thought?

(Also, in regards to the article's final sentence, my feeling (and many agree that it is likely) is that Chess isn't a draw game like tic-tac-toe, but a first-player game; i.e., that with perfect play on both sides, white wins.)

OKThen,

I'm not an expert on AI or anything, but I've taken a few philosophy courses dealing with the subject. The fact is that you really are begging the question of whether or not the computer is thinking.

Computers are actually nothing but rule-based symbol manipulating machines. However, to conclude from this that computers are incapable of thought is serious question begging. At least some philosophers consider human brains to be rule based symbol manipulators, and it's clear that human brains are capable of thought.

The real question is how do we define thinking? Unfortunately, that's not really a question with a truly scientific answer. We might be able to measure brain activity that corresponds to thinking, but that would only tell us what thinking consists of in organic brains. There's no reason to think that a "silicon brain" would exhibit similar measurable activity that would allow us to objectively conclude that it was engaged in thought.

Ultimately, then, whether a computer could think is a philosophical question. You may be right; computers may not be capable of thought. However, it is premature to dismiss out of hand the possibility that a computer is truly thinking.

Dale and Sean T

Yes, yes.

Remember when the automobile was first built? It was great entertainment to see who could go faster, the human or the car.

That entertainment of humans chasing cars lasted a couple of years. Dogs are still interested.

Then human interest shifted to how fast the best humans could drive the best cars.

For several decades now, there has been great interest in the competition between the best chess computer engines and the best human grandmasters. The fact that humans are still challenging the best chess engines is amazing. We gave up so quickly on cars.

Here's a discussion about the world's best chess engine Houdini versus grandmasters.

http://www.chess.com/forum/view/general/humans-v-houdini-chess-engine-e…

All that being said, one day, just as any car can move across a flat surface faster than any human, all computers will be able to play a standard game of chess better than any human.

But if the challenge is cross country racing with a few significant obstacles; then the best race car (robotic or with a human driver) is soon in the ditch.

Likewise, if we get off the smooth surface of the standard chess game and move to rougher terrain (e.g. Fischer Random chess, in which the starting positions of the back-rank pieces are randomized, with the bishops kept on opposite colors), then in such a rough-terrain chess game no computer has a chance against any grandmaster. The grandmaster adapts instantly with one glance at the board and the new arrangement of pieces, whereas the best chess computer in the world cannot adapt, just as a race car cannot adapt to a ditch.
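The Fischer Random setup rule is simple enough to enumerate. This Python sketch generates every legal back rank under the standard Chess960 rules: the bishops on opposite-colored squares, as described above, plus the king placed somewhere between the two rooks so that castling stays possible. That second rule belongs to the official variant but is not mentioned in the comment. Black's pieces mirror white's arrangement.

```python
from itertools import permutations


def is_chess960_start(rank: tuple[str, ...]) -> bool:
    """Check one back-rank arrangement (files a-h) against the Chess960 rules."""
    bishops = [i for i, piece in enumerate(rank) if piece == "B"]
    rooks = [i for i, piece in enumerate(rank) if piece == "R"]
    king = rank.index("K")
    # Adjacent files alternate colors, so opposite colors means opposite parity.
    opposite_colors = bishops[0] % 2 != bishops[1] % 2
    king_between_rooks = rooks[0] < king < rooks[1]
    return opposite_colors and king_between_rooks


def all_chess960_starts() -> list[str]:
    # set() removes duplicates caused by swapping two identical pieces.
    arrangements = set(permutations("RNBQKBNR"))
    return sorted("".join(r) for r in arrangements if is_chess960_start(r))


starts = all_chess960_starts()
print(len(starts))           # 960
print("RNBQKBNR" in starts)  # True: the classical setup is one of them
```

Of the 5,040 distinct arrangements of the eight pieces, 4/7 put the bishops on opposite colors and 1/3 of those put the king between the rooks, which is where the 960 comes from.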

But of course, even this challenge will eventually be mastered by every computer with a chess program.

But what we really have is experts (i.e. grandmasters) helping to program computers, and then, in turn, grandmasters learning from those computers; a grandmaster, or anybody, can now play a dozen grandmaster-level games in an afternoon.

The chess computer is the grandmaster's tool just as surely as the race car is the race driver's tool.

But long after we have gotten bored with the idea of humans racing automobiles to see who wins, and long after we have gotten bored with the idea of humans playing chess against computers to see who wins, we will still be excited by humans versus humans running or playing games, in a way that we are NOT excited about robotic machines (machines without human drivers) versus robotic machines, whether cross-country racing or playing chess.

The importance of a Google car driving more safely than me is that I can read a book or my tablet, sleep, or play a game with my son.

And the simple games that I play with a child have ever changing rules and even new words that a chess computer would have no idea how to invent or adapt to; but that a child can invent and adapt to instantly.

That being said, what I envision long before we have sentient machines is, yes, helper and function machines (e.g. trading computers, autopilots, etc.), but what I really envision is teaching machines.

And we have some of those early teaching machines today. These teaching machines (e.g. the iPad with reading, math, drawing, music, chess, etc. programs) teach humans, adults and children alike. But they cannot teach a chess computer, especially the world's best chess computer, because such machines have a very limited repertoire of learning modes compared to a child.

So yes, the use of computers/programs/databases in everything is pervasive, even insidious. But no computers are thinking yet.

Now, when computer viruses start evolving on their own, for their own purposes; when chess programs start learning on their own, even just for the purpose of chess...

Let's take the world's top two or three chess computers and have them play against one another for a year. Are they significantly better after a year? Have they learned any new insight into some complex position that they can teach a grandmaster? Has there been an "aha"?

Artificial intelligence is powerful. And I certainly want to have the best machine/program/database tool on a particular subject matter or activity in my pocket or as a tool (e.g. vehicle).

But so far, I know of no machine that has been allowed, and been able, to develop its own humble interest.

I mean that a thinking chess computer might learn to develop a new skill or interest on its own (e.g. a sense of humor). And maybe such a chess computer might try to steer a game against an international grandmaster into an exact replay of a historic game, for example one between Bobby Fischer and Boris Spassky, and halfway through such an insightful game the international grandmaster would get the joke and laugh: "Ha ha ha. The machine could have beaten me here and here, but it's pretending it's Bobby Fischer."

I certainly would like to have such a companion. Of course, I will probably have such a computer/program/database companion assistant, and it will make such jokes; but not because of a computer-inspired insight, rather because it has been programmed by a human for human humor.

Still that will be excellent.

But when machines start making machine jokes to one another that you and I and even the GREATS don't understand, well then...

Of course, then all discussions change. I mean a sustainable planet Earth for humans is a very different thing than a sustainable Earth for machines.

Back to basics: our current non-thinking machines. The model of the intelligent machine is the BOOK. Yes, the book is a machine. On my bookshelf, these books tell me the wisdom of Socrates, the humor of Aristophanes, the brilliance of Leonardo, and all the greats.

The BOOK is an intelligent machine. But so too is the WHEEL an intelligent machine. Each captures and transmits a certain kind of human intelligence and thought.

OK Then,

I think maybe I wasn't clear. By no means do I think that the current chess-playing computers are actually thinking. I just cautioned about dismissing the idea of AI out of hand. You correctly point out that these chess programs require input from grandmasters, for instance. Perhaps therefore, they are not the best examples. Consider instead the state of the art in backgammon playing computers. They certainly aren't as advanced in their level of play as chess computers are; a top-notch backgammon player can do pretty well against the best programs. However, the manner in which they learn (yes, this word is appropriate) to play the game differs greatly from the chess computers.

Top-level backgammon programs start out knowing almost nothing about backgammon (a few bear-off databases and the like are pre-programmed). These computers play games against themselves. Each legal position potentially encountered is assigned a weight, with that weight being increased each time that position leads to a win and decreased each time it leads to a loss. Given a choice of moves, the program chooses the move with the greatest weight (or chooses randomly among positions with the same weight).

To begin with, all legal positions have equal weight. The computer then plays games against itself, with the weights changing as games are completed. Initially, it makes random moves since all positions are weighted equally. However, some positions are stronger than others, and over large numbers of games, the weights of the stronger positions increase, making the computer more and more likely to reach these positions. Similarly, weaker positions tend to see their weights decrease, making the computer less likely to make moves that result in these positions. The practical upshot of this is that the program essentially teaches itself to play backgammon without any input from outside (other than a few items that are already pretty well solved, included mainly to reduce the training time of the program.)
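The weighting scheme described above can be sketched on a game far simpler than backgammon. The Python below uses a hypothetical toy game (21 counters, each player removes one to three, whoever takes the last counter wins). Each weight is keyed by the position a player leaves behind; after each game, every position the winner created gains one and every position the loser created loses one, and moves are otherwise chosen greedily with random tie-breaking. This follows the comment's tabular description; it is not how TD-Gammon or later programs actually work (they trained neural networks with temporal-difference learning), and the game, names, and parameters are illustrative assumptions.

```python
import random
from collections import defaultdict

START = 21          # counters at the start (hypothetical toy game)
MOVES = (1, 2, 3)   # a player may remove 1, 2 or 3 counters


def legal_moves(counters: int) -> list[int]:
    return [m for m in MOVES if m <= counters]


def choose(counters: int, weights: dict[int, int], rng: random.Random) -> int:
    """Pick the move whose resulting position has the highest weight, breaking
    ties at random (all weights start at zero, so early play is random)."""
    options = legal_moves(counters)
    best = max(weights[counters - m] for m in options)
    return rng.choice([m for m in options if weights[counters - m] == best])


def self_play(games: int, seed: int = 0) -> dict[int, int]:
    rng = random.Random(seed)
    weights: dict[int, int] = defaultdict(int)  # position left behind -> weight
    for _ in range(games):
        counters, player = START, 0
        created = ([], [])  # positions each player left for the opponent
        while counters > 0:
            counters -= choose(counters, weights, rng)
            created[player].append(counters)
            player = 1 - player
        winner = 1 - player  # the player who just took the last counter
        for pos in created[winner]:
            weights[pos] += 1
        for pos in created[1 - winner]:
            weights[pos] -= 1
    return dict(weights)


w = self_play(5_000)
print({pos: w.get(pos, 0) for pos in range(9)})
```

In this game the positions worth leaving for the opponent are exactly the multiples of four; over many games those tend to accumulate higher weights, though a purely greedy scheme like this one can also get stuck on early habits.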

It's much less clear to me that such a program cannot be said to be thinking, at least within the limited domain of the game of backgammon. It's also not clear to me that human learning doesn't occur in much the same way. We do learn much by trial and error. The assignment of numerical weights to situations seems to me to be just a formalization of the process of learning through trial and error. Is there really a difference in principle?

Just to add to what I posted above, it is true that top-level backgammon players still beat the best programs, but some of that might well be attributed to the fact that these players have adapted their game to what has been learned from the programs. Top-level players have changed how they play based on these programs. It's not a matter of adapting their games to beat the computers; the way that they played before these programs were developed was objectively wrong! They actually learned how to play a better game of backgammon from studying the computer programs.

At least within this limited domain, new information was generated by a computer, therefore. This was done without prior input from experts in the field. Where did this information come from if the computer really isn't intelligent?

"Kasparov with his small team of grandmasters prepared to play with perfect strategy, because you need perfection to play against a machine."

One of the most interesting moments in the Deep Blue vs Kasparov re-match was when Kasparov deliberately played a move that was not perfect from a neutral strategic standpoint, but was rather designed to trip up the computer by falling outside its expectations. And it worked. One of Kasparov's strengths was that he understood how computers 'think'.

Unfortunately, this led to a weakness: he knew how previous computers thought, but not how one like Deep Blue did. When DB played a move that seemed to require human-level intuition and was very unlike what a computer would normally play, Garry called shenanigans.

All around a fascinating episode in human history.

One of the first things my AI professor told us (in his introductory lecture, titled "It's 2001 -- Where's HAL?") was to think of "AI" in two broad categories: "strong" and "weak" AI. "Strong" AI is HAL. "Weak" AI is any decision-making system that takes into account environmental input. At some level, your thermostat is an AI.
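The thermostat example can be made concrete. This Python sketch is a hypothetical on/off thermostat; the small hysteresis band is an added assumption so that the heater doesn't switch rapidly back and forth around the set point. It takes one environmental input and makes one decision, which is all that "weak" AI in the professor's sense requires.

```python
class Thermostat:
    """A minimal 'weak AI': it senses its environment (the room temperature)
    and makes a decision (heater on or off)."""

    def __init__(self, target: float, band: float = 0.5) -> None:
        self.target = target
        self.band = band      # hysteresis, so the heater doesn't flicker
        self.heating = False

    def decide(self, temperature: float) -> bool:
        if temperature < self.target - self.band:
            self.heating = True
        elif temperature > self.target + self.band:
            self.heating = False
        # Inside the band, keep doing whatever we were already doing.
        return self.heating


t = Thermostat(target=20.0)
readings = [t.decide(temp) for temp in (18.0, 19.8, 20.6, 20.2)]
print(readings)  # [True, True, False, False]
```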

The point of teaching us this distinction was to keep us from getting hung up on the search for HAL. What we would be learning were algorithms for producing practical, useful AIs: algorithms that have been very successful at their tasks and, much like Deep Blue, often surpass the humans who used to perform them. So, you know, if you want to try to code up HAL, feel free, but if you want to accomplish useful tasks, the question of whether "strong" AI is possible is not necessarily relevant.

However, the thing is, it's not clear that there really is a difference except in terms of human perception. It's not clear that what the brain does and what "weak" AIs do is actually all that different. The main difference is that you can see and understand (if you're a programmer) every single step going on in the computer's "thoughts", while with a human (even if you're a neurologist) you can't.

At some point, if we make our "weak" AI algorithms and computers strong enough, and put enough of them together that they can make "intuitive" leaps like Deep Blue already did at least once, and like Watson does constantly, you have to ask whether it means anything to distinguish between "real" intuition and an algorithm that just happens to pick surprising answers sometimes. If we eventually discovered that human intuition was basically the same thing, would that mean we had ceased to "really" think?

The answer to the Chinese Room is this: No, the man inside the room doesn't know Chinese. However, I would argue that the system as a whole does.

@Sean T.

"Computers are actually nothing but rule-based symbol manipulating machines. "

And the human brain is...?

Ha! It's my point but in 5 words instead of a wall of text. Kudos to you.

James,

I believe if you read further in the post that you quoted, you will see that I also mentioned that brains might very well also be rule-based symbol manipulators.

If you level the playing field, can computers match human (chess) performance?

Neurons have a maximum firing rate of about 1000 cycles per second. Very few neurons actually operate in that range, but for simplicity I'll take that as the criterion for a 'level playing field'.

As we know, today's processors operate at gigahertz frequencies. Chess software is very much dependent on brute force. A chess computer limited to a clock frequency of 1000 hertz would not make much of a dent.
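The size of that handicap is a one-line calculation. In this sketch the 3 GHz clock is an assumed, typical figure, and it simplistically assumes that search time scales inversely with clock rate (ignoring memory speed, parallel cores, and so on).

```python
CPU_HZ = 3e9      # assumed typical processor clock, in hertz
NEURON_HZ = 1e3   # the ~1000 Hz upper firing rate used above


def slowdown(fast_hz: float, slow_hz: float) -> float:
    """How many times longer the same work takes at the slower rate."""
    return fast_hz / slow_hz


factor = slowdown(CPU_HZ, NEURON_HZ)
# A search that takes one second at full speed, run at 1000 Hz instead:
days = factor * 1.0 / 86_400
print(f"{factor:,.0f}x slower; 1 s of search becomes {days:.1f} days")
```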

Clearly, the way that computers process information is profoundly different from the way humans process information. In the case of chess what is produced is chess moves, but the information processing to arrive at the chess move is different for humans and computers.

About the information processing:

For the chess computers, their brute force capability is not enough. Even a computer cannot evaluate all possible positions. In many positions there are only two or three worthwhile moves. Ideally the software should be able to discard all moves that are not among the few worthwhile moves. I will refer to that as 'pruning the tree'.
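One well-known way chess programs prune the tree is alpha-beta search. It is a slightly different idea from the one described here: alpha-beta only skips branches that provably cannot change the result, whereas discarding "unworthy" moves (selective or forward pruning) relies on heuristics and can be wrong. The Python sketch below runs plain minimax and alpha-beta on a small hand-made tree (leaves are scores from the first player's point of view) and counts how many leaves alpha-beta actually had to examine.

```python
import math

# A tiny two-ply game tree: the maximizing player chooses a branch, then the
# minimizing player chooses a leaf. Leaves are scores for the maximizer.
TREE = [[3, 5], [6, 9], [1, 2], [0, -1]]


def minimax(node, maximizing: bool):
    """Full-width search: looks at every leaf."""
    if isinstance(node, int):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def alphabeta(node, maximizing: bool, alpha=-math.inf, beta=math.inf,
              counter=None):
    """Same answer as minimax, but skips branches that cannot matter."""
    if isinstance(node, int):
        if counter is not None:
            counter[0] += 1  # count leaves actually evaluated
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, counter))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizer above already has a better option
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, counter))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizer above already has a better option
    return value


leaves_seen = [0]
print(minimax(TREE, True), alphabeta(TREE, True, counter=leaves_seen))  # 6 6
print(leaves_seen[0], "of 8 leaves evaluated")                          # 6 of 8
```

The savings grow quickly with depth and with good move ordering, which is one reason brute-force engines can search so deep.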

Grandmaster John van der Wiel had a reputation of doing well against computers. He described that in order to do well against a computer you have to play a kind of game that you wouldn't play against a human. You must play for positions where in general the progress of play is extremely slow, and where there are all the time many, many moves that are equally worthwhile.

In positions like that the tree-pruning algorithms performed poorly, and at the same time the tree pruning was needed most. (I assume this weakness was overcome with increased brute force capability.)

Interestingly, in the game of Go the top Go computers are still not a match for the top human players.

And the reasons for that, as I understand it, are related to the type of play that was the best kind of play against chess computers.

In Go every position has many potentially worthwhile moves. Clearly, humans are efficiently pruning the tree of possible moves, otherwise they would not be able to play at the level they play. But for developers of Go playing software it has proven very hard to develop good tree pruning.
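A back-of-the-envelope calculation shows why good pruning matters so much more in Go. The sketch below uses the commonly quoted average branching factors of roughly 35 for chess and 250 for Go, and compares a full-width search with one that keeps only three candidate moves per position; all three numbers are rough assumptions, not measurements.

```python
def tree_size(branching: int, plies: int) -> int:
    """Leaf positions in a uniform game tree: branching ** plies."""
    return branching ** plies


PLIES = 6
for game, b in (("chess", 35), ("Go", 250)):
    full = tree_size(b, PLIES)
    pruned = tree_size(3, PLIES)  # keep only 3 "worthwhile" moves per node
    print(f"{game}: full width {full:.2e}, pruned to 3 moves {pruned:,}")
```

The pruned count is identical for both games; the hard part, as the comment says, is deciding which three moves are worth keeping, and that is exactly where Go programs have struggled.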

Considerations like these have convinced me that the way computers process information is profoundly different from the way humans process information.

Yes, yes, yes. And yes: strong versus weak AI.

"It’s much less clear to me that such a program cannot be said to be thinking, at least within the limited domain of the game of backgammon. " Really.

Hmm, what about a robot vacuum cleaner with the chips and programming to learn the obstacles and vacuum an apartment? Is such a vacuum cleaner, iPad, or car thinking? For sure it is handling information.

But back to the title of this article: "Chess is Almost Solved!"

Hmm, probably yes.

"Hmm, what about a robot vacuum cleaner with the chips and programming to learn the obstacles and vacuum an apartment. is such a vacuum cleaner, ipad or car thinking? "

Thinking about vacuuming, or showing you cat videos on youtube, or the timing of your spark plugs? Yeah, personally I'd say it is.

Just like an angler fish thinks about catching the shrimp that's been attracted to its lure.

Neither (probably, in the latter case) is consciously thinking about the fact that it is thinking about accomplishing the task. There's a certain meta-thinking you seem to be referring to, the thing we call consciousness or self-awareness. While it's obviously difficult to say for sure, we've only identified a select number of animals that display it.

So the fundamental problem is this: between the simplest organisms with a central nervous system and human beings, tell me where the behavior of that nervous system becomes "thinking", and where it becomes "consciousness".

Now, assuming you have denied one or both of those labels to the simplest brain, you must then observe that using the same fundamental cellular machinery (just much more of it, arranged with greater complexity) you can get all the way to an obviously thinking and conscious brain.

Why not so of a computer? What's different about the form of "information handling" done by a neuron that when arranged in the correct fashion in sufficient numbers it produces thinking and consciousness?

At some point our "weak" AI may become sophisticated enough, and built into complex enough ensembles, that it gives the semblance of conscious thought. And yet we may still understand it fully as a sequence of instructions executed by the computer. So what? If we understood our brains at that level, would that mean we weren't thinking all this time?

Think of a robotic vacuum cleaner as a kind of gigantic bacterium or a gigantic human cell. If I organize 110 trillion of those robotic vacuum cleaners in the same way that a living human body is organized, with its 10 trillion human cells and its 100 trillion symbiotic bacteria, then that configuration of 110 trillion robotic vacuum cleaners might attain consciousness, or NOT.

Well that's obviously going to depend on how you wire them up, and how each one is programmed.

And so with a pile of cells.

What's the difference? Why is the organic machinery special?

Hmm, Ethan fooled yet again... it's getting pretty boring.

@OKThen,

I don't want to put words in your mouth, but it sounds to me like you are taking the position that organic brains have some characteristic, property, etc. that allows them to engage in mental processes, and that furthermore, non-organic "brains" (such as computers), cannot possess this faculty. If that's true, then it's your right to hold this belief. I cannot argue against this except to ask you what this special property is. It cannot be complexity of organization. In principle, it's certainly possible to arrange "artificial neurons" in arbitrarily complex fashion. Are you really arguing that an arrangement of such artificial neurons that duplicates the arrangement of organic neurons in a human brain would not be able to engage in mental activity, up to and including consciousness? If so, then I'll have to respectfully disagree with you without further argument unless you can tell me what it is about the human brain that is fundamentally different from the functionally equivalent arrangement of artificial neurons.

OKThen,

Just to be clear: I am arguing that the factor that causes organic brains to have the ability to engage in mental processes is the enormously complex arrangement of neurons. Whether it's possible in practice to arrange artificial neurons in such a complex arrangement as to produce mental states is an open question in my mind. I do believe that it's possible in principle, however.

Again, though, I think you've begged the question. I have given you an example of a computer program that requires no external input of information (other than the formal rules of the game of backgammon). No expert backgammon players assisted in programming the computer. The only inputs, which weren't even strictly necessary, were well-understood and calculated items, such as bear-off databases. These really only served to speed the learning process; the program would have been able to develop these itself. The program ran, as I described before, and taught itself to play a very strong game of backgammon. Its game was so strong that expert human players, who have studied the game for years, have changed the way that they play the game against other humans because of what the computer programs have taught them. Why does this not qualify as thinking, at least in the restricted domain of the game of backgammon?

CB

"It’s not clear that what the brain does and what “weak” AIs do is actually all that different."

Well, I disagree. So let me explain my opinion.

All AI machines today have human intelligence embedded in them.

Sometimes that intelligence is tacitly embedded, e.g. the wheel.

Sometimes it is explicitly embedded, e.g. a book, or a chess program.

In my opinion, all AI is by definition weak AI, because it is fundamentally very cautious and controlled.

When we are talking about HAL and computer thought, when we are talking about Artificial Consciousness, we are talking about something revolutionary, incendiary, uncontrollable.

Consciousness is not limited to carbon-based life forms. Perhaps there is consciousness of different sorts across the cosmos (we just don't know of it yet); perhaps we can facilitate the emergence of artificial consciousness in computer programs (we just haven't done it yet).

But, in my opinion, wiring together a bunch of robotic vacuum cleaners has zero chance of producing Artificial Consciousness, because the unit (i.e. the robotic vacuum cleaner) is missing some properties essential for consciousness.

Consider the spectrum:

stone → virus → bacteria colony → multicellular organism → human

In my opinion, the virus already has some essential ingredients not only for a living organism but for a conscious organism.

A virus not only self-replicates exponentially; it also mutates extensively, and those mutants replicate exponentially or die. This prolific originality is, in my opinion, essential for the emergence of thought as well as the emergence of life.

In my opinion

Before we can have conscious thought

There has to be unconscious thought

Before unconscious thought

There has to be life

Before there can be life

There has to be self

This applies to carbon-based consciousness and to artificial consciousness.

So where in the state of our current information technology should we be looking for an artificial proto-self? An artificial proto-organism? And hence an artificial proto-thought?

In my mind, the computer research that has the best chance of spawning artificial life, and hence artificial thought, is what I call:

the computer virus (i.e. hackers) versus computer operating system wars (i.e. information technology organizations)

Clearly the ideal virus not only replicates exponentially and effectively disrupts; it continually mutates explosively to outmaneuver computer operating system defenses and offensives.

Clearly the ideal operating system is not only actively defensive; it is preemptively offensive in order to maintain its self-integrity (i.e. continual function) against known and unknown threats.

Thus both the ideal computer virus and ideal computer operating system must be capable of mutation.

How disruptive are virus programmers/hackers willing to be (e.g. in North Korea, China, Iraq, CIA, Mafia, some revolutionary movement)? In my opinion, a lot more than the designers of computer chess and vacuum cleaner programs.

In my mind, current computer viruses are misnamed; they are more aptly named proto-computer viruses; because they do not massively mutate like organic viruses.

Our current computer viruses are quite mild-mannered and cautious creations compared to a fully functioning computer virus that could not only replicate and disrupt but also mutate and evolve in the wilds of the internet environment.

Nor are our current computer operating systems like living cells or organisms; their systems of survival (implicit values) are not self-organized like a rose's thorn.

It is the designers' values that are embedded in today's computer viruses and computer operating systems. It is not the computer viruses and computer operating systems themselves that are trying diligently to outwit, outmaneuver, out-mutate and out-evolve each other.

Be that as it may, in my opinion, the hackers and operating system programmers are at the forefront of artificial life and artificial intelligence research.

The progression, in my opinion, will be:

- artificial proto-self/proto-thought (e.g. current computer viruses, current state)

- artificial self (e.g. a self-evolving computer virus, current research)

- artificial life (e.g. a self-evolving computer operating system, current research)

- artificial unconscious thought (e.g. self-interested predator/prey survival struggles among millions of species of artificial bacteria, plants, jellyfish, insects, etc. invading an internet-type environment)

- artificial conscious thought (e.g. a self-interested Genghis Khan versus a self-interested artificial Socrates, our own living operating systems)

I think a predatory artificial computer insect capable of some kind of unconscious thought (i.e. survival instinct) is much more dangerous to human existence than an artificial, biological, GMO talking companion dog.

So biological cells, organisms and thought are not special.

Nor are they well understood.

The hacker-versus-operating-system war over the development of artificial life and artificial thought is well underway and likely to escalate, because cyberwar aimed at developing artificial thought is heavily funded, driven by greed and politics.

I personally hope the gap between artificial intelligence and artificial life, unconsciousness and consciousness is much wider than science fiction suggests.

replace with:

"Be that as it may, in my opinion, the hackers and operating system programmers are at the forefront of artificial life and artificial THOUGHT research."

So if I understand, your opinion is that consciousness can only occur as a consequence of evolution. And the reason the machinery of cells can be the components of a conscious mind but the machinery of a Roomba cannot is because the former were evolved.

Now, there are two ways I can take this.

One is that it isn't the structure that matters, it's how the structure came to be, that defines its ability to be conscious. A machine designed and a machine evolved could be exactly the same, but only the latter could be conscious. That's obviously nonsense, so I'll assume this was not your meaning.

The other is that you're just saying that the algorithms and patterns necessary for producing consciousness cannot be discovered or invented through traditional means, but only created as an emergent behavior of evolutionary processes.

Well first, that's fine, because Genetic Algorithms are a great way to connect up our collection of Roombas. It'd be my preferred way to do it, in fact. Computer "virus" development is not actually much like biological evolution at all. But creating populations of code, testing the result, propagating the successes with mutation and crossover and deleting the rest, is evolution, and we can do it faster than all the hackers employed by the Russian mob. So... why can't Roombanet be sentient again?
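The recipe in this comment (a population of candidates, a fitness test, mutation and crossover for the successes, deletion for the rest) is the textbook genetic algorithm. Below is a minimal Python sketch, assuming a toy problem of evolving a 32-bit string toward a fixed target; the target, population size, mutation rate and function names are illustrative choices, not anything from the thread, and nothing here involves Roombas.

```python
import random

def fitness(genome, target):
    # Number of positions where the candidate matches the (hypothetical) target.
    return sum(g == t for g, t in zip(genome, target))

def crossover(a, b, rng):
    # Single-point crossover: prefix from one parent, suffix from the other.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(genome, rate, rng):
    # Flip each bit independently with probability `rate`.
    return [1 - g if rng.random() < rate else g for g in genome]

def evolve(target, pop_size=50, generations=200, rate=0.02, seed=0):
    """Toy genetic algorithm; returns (best_genome, generations_used)."""
    rng = random.Random(seed)
    n = len(target)
    population = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    for gen in range(generations):
        # Test every candidate and rank by fitness.
        population.sort(key=lambda g: fitness(g, target), reverse=True)
        if fitness(population[0], target) == n:
            return population[0], gen
        # Delete the rest: only the top half survives to breed.
        parents = population[: pop_size // 2]
        # Propagate the survivors through crossover and mutation.
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rate, rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    population.sort(key=lambda g: fitness(g, target), reverse=True)
    return population[0], generations

target = [1, 0] * 16  # hypothetical 32-bit target
best, used = evolve(target)
print(fitness(best, target), "of", len(target), "bits matched after", used, "generations")
```

Keeping the top half (truncation selection) is just one simple choice; tournament or fitness-proportional selection would serve the same role.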

Second, let me ask the exact same question as before only instead of about the machinery it's about the algorithm implemented by that machinery: What's so special about the algorithm implemented by a conscious mind that it's impervious to discovery, reverse-engineering, or independent invention? If we rooted out the fundamental pathways that make humans "conscious", why could those not be re-created in an electronic analog? Why is evolution the *only* way to achieve this?

It's all well and good to declare that only life can lead to thought, which can lead to consciousness. It makes for nice poetry. It also excludes anything a computer does from being "thought", by definition. But how do you justify believing that it is actually so?

"If we rooted out the fundamental pathways that make humans “conscious”, why could those not be re-created in an electronic analog? Why is evolution the *only* way to achieve this?"

Well, consider http://www.scientificamerican.com/article.cfm?id=c-elegans-connectome

The 302 neurons of the nematode C. elegans' nervous system were mapped and published in 1986. Twenty-seven years later, no one has been able to re-create C. elegans' "simple" nervous system as a dynamic, functioning, artificial unconscious nervous system in a computer program.

Nor do neuroscientists know how the “unconscious” nervous system of C. elegans, with its 302 neurons, works.

"So far, C. elegans is the only organism that boasts a complete connectome... (but)... critics point out that the C. elegans connectome has not provided many insights into the worm's behavior... that connectome by itself has not explained anything... a lone connectome is a snapshot of pathways through which information might flow in an incredibly dynamic organ, it cannot reveal how neurons behave in real time, nor does it account for the many mysterious ways that neurons regulate one another's behavior. Without such maps, however, scientists cannot thoroughly understand how the brain processes information at the level of the circuit."

"Re-creating" the 100 billion neurons and the 100 trillion synapses of the human brain (or even a small subset of them) "in an electronic analog" seems unlikely, since we can't re-create even the 302 neurons and 7,000 synapses of C. elegans as a dynamic artificial unconscious computer nervous system.

I will be very impressed if/when C. elegans is re-created as an artificial unconscious nervous system in a computer program.

So, since artificial unconsciousness has not been programmed, talk of re-creating human consciousness in a computer program seems premature.

On the other hand:

"Artificial life researchers have studied self-replicators since 1979 and the first autonomously mutating self-replicating computer programs were introduced by Ray in 1992... As opposed to computer malware artificial life systems are squarely aimed at the research environment... By using an evolutionary function, computer malware could.." arXiv:1111.2503

"Fourth generation viruses use simple armoring techniques such as encryption... Fifth generation viruses are self mutating. They infect other systems with other versions of themselves." But this is not yet autonomous self-mutation.

Fortunately, autonomous self-mutation of computer programs seems very difficult, if not impossible, in current computer environments.

So either way, I don't expect artificial consciousness soon.

Of course, chess is a game (i.e. a simulation) of civilized aggression (i.e. war).

This six-page article pretty much sums up the state of cyber warfare.

http://www.vanityfair.com/culture/features/2011/04/stuxnet-201104

"Stuxnet is like a self-directed stealth drone: the first known virus that, released into the wild, can seek out a specific target, sabotage it, and hide both its existence and its effects until after the damage is done. This is revolutionary... Stuxnet is the Hiroshima of cyber-war. That is its true significance, and all the speculation about its target and its source should not blind us to that larger reality. We have crossed a threshold, and there is no turning back."

Not really sure what chess has to do with computer viruses.

And thanks for the article on the connectome. I didn't know about this study before.

But something is missing in order to be able to replicate the "intelligence" of the worm. Let's use the computer analogy.

What they are doing is mapping all the wires. And this is an important 50% of the job. But like the article says, it alone is not enough. And that's perfectly logical. If you replicated all the wiring of a computer, it still wouldn't work. What's missing?... the software. The basic one. The assembler. Some code that tells the hardware how to work. And this, IMO, comes from DNA, or RNA in the case of worms, I guess.

So without an artificial equivalent to DNA, wires alone won't do it.

Sinisa

Just a stream/circle of thought

chess solved - chess programs - to AI - artificial thought - viruses approaching artificial life - cyberwar (hmm, chess is simulated war; the ultimate simulated war programs aren't chess engines, they are cyberwar programs)

"If you replicated all the wiring of a computer, it still wouldn’t work. What’s missing?… the software. "

Thanks for hitting me over the head with the obvious.

Amazingly, this now extends as far back as move 3!

Thirty-five comments in, and I'm apparently the first to point out that at least one of those branches was solved a long time ago, without the aid of computers. After 1 g4 e5 2 f3 Black forces a win with 2 ... Qh4 mate. It's called the Fool's Mate. (If you prefer the old-fashioned descriptive notation, it's 1 P-KN4 P-K4 2 P-KB3 Q-R5.)

"Thanks for hitting me over the head with the obvious."

No... I was just thinking aloud. It wasn't meant as anything offensive.

OKThen, the problem being difficult is not the same as it being impossible. I have not claimed, and do not intend to claim, that Strong AI is imminent. I do claim that it is possible, and that there is absolutely no reason to believe that it isn't. You've claimed that there is, but the fact that we haven't figured it out YET isn't evidence that this is so.

What is it about the operation of the worm's nervous system that you feel is utterly inscrutable and immune to human understanding?

The computer virus papers you refer to demonstrate what I'm saying: viruses are not much like biological life at all, and other parts of computer science, genetic algorithms in particular, are far ahead in that regard, with virus makers perhaps just starting to think about picking some of it up. But it's not just that fully random self-mutation in the wild is hard; it's generally deleterious to the purpose of the virus. Most random changes are detrimental, and in a software program in particular they will often cause it to stop working entirely. If your goal is to infect the largest possible number of computers, then successfully installing a virus only for it to randomly disable itself is counter-productive. That's why viruses have only had "mutations" in a very limited sense, to try to fool anti-virus pattern matchers. Actually "evolving" new functionality isn't necessary and isn't worth it.

In the lab, though, you can throw away millions of generations of entirely negative mutations with no benefit just so that you find one that pushes you closer to an ideal solution.
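The "throw away the bad mutations" point shows up even in a (1+1) evolutionary algorithm, about the simplest selection loop there is: one parent, one mutant per step, and the mutant is kept only if it is strictly better. This sketch assumes the toy OneMax problem (maximize the number of 1 bits in a 64-bit string); the function name and parameters are illustrative, and the counters simply tally how many mutants were kept versus discarded.

```python
import random

def one_plus_one_ea(n_bits=64, max_steps=100_000, seed=1):
    """(1+1) evolutionary algorithm on the toy OneMax problem.

    Returns (best_fitness, accepted, rejected), where `accepted` counts mutants
    that strictly improved on the parent and `rejected` counts the ones discarded.
    """
    rng = random.Random(seed)
    parent = [0] * n_bits
    best = 0
    accepted = rejected = 0
    for _ in range(max_steps):
        if best == n_bits:
            break  # optimum reached
        # Flip each bit with probability 1/n: usually one bit changes, sometimes none or several.
        child = [1 - b if rng.random() < 1 / n_bits else b for b in parent]
        score = sum(child)
        if score > best:
            parent, best = child, score
            accepted += 1
        else:
            rejected += 1  # neutral or harmful mutation: thrown away
    return best, accepted, rejected

best, accepted, rejected = one_plus_one_ea()
print(best, accepted, rejected)
```

Near the optimum almost every random flip breaks a bit that was already right, so the rejected count grows far faster than the accepted one: the lab version of discarding generations of negative mutations.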

The part that interested me is so much more boring.

I was taught this in game theory: chess is a finite game, and there's a theorem that says it must have a value, in the technical sense. This means that either White can force a win, or Black can force at least a draw, but unlike tic-tac-toe, we don't know which of those holds. I always thought this was a cute result.

So how much calculation would it take to figure that out? I'm kinda thinking the answer is "a ridiculous amount".
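The "value" of a finite game can, in principle, be computed by backward induction (minimax): score every finished position, then let each side pick its best option working back from the end. Tic-tac-toe is small enough to do this exhaustively. The sketch below is a minimal illustration with hypothetical helper names (`winner`, `value`); chess has the same structure, only with a tree far too large to enumerate.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board, cells numbered 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]

def winner(board):
    """Return 'X' or 'O' if that side has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None

@lru_cache(maxsize=None)
def value(board, to_move):
    """Value under perfect play: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0  # full board, no line: draw
    nxt = "O" if to_move == "X" else "X"
    results = [value(board[:i] + to_move + board[i + 1:], nxt)
               for i, cell in enumerate(board) if cell == "."]
    # The side to move picks the outcome best for itself.
    return max(results) if to_move == "X" else min(results)

print(value("." * 9, "X"))  # 0: tic-tac-toe is a draw with perfect play
```

Caching positions with `functools.lru_cache` plays a role similar to a chess engine's transposition table: different move orders reaching the same position are evaluated only once.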

@rook,

Well, to calculate it by brute force, you'd have to analyze every possible legal position that could arise during a game of chess. Each player has 20 legal first moves. That number changes as the game progresses, but for simplicity, let's take 20 as the number of legal moves available at any point in a game.

Now, there are 20 × 20 = 400 positions to be analyzed after each player's first move. This number grows rapidly as the game goes on: after 20 moves by each player, it's 20^40, on the order of 10^52 move sequences, so yeah, I'd say there's a ridiculous amount of calculation needed to determine whether chess is a win for White or a draw when played optimally. It's almost certainly an intractable problem for current computing technology.
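The estimate in this comment is easy to reproduce under its own simplifying assumption of exactly 20 legal moves per turn (real branching factors vary). This minimal sketch counts move sequences, not distinct positions; many sequences transpose into the same position, so within this model it overstates the number of positions. The constant and function name are illustrative.

```python
import math

BRANCHING = 20  # assumption from the comment: every turn offers exactly 20 legal moves

def sequences_after(moves_per_player, branching=BRANCHING):
    """Count move sequences after each player has made `moves_per_player` moves."""
    plies = 2 * moves_per_player  # a ply is a single move by one side
    return branching ** plies

print(sequences_after(1))            # 400
n = sequences_after(20)              # 20**40
print(f"{n:.2e}", math.floor(math.log10(n)))  # 1.10e+52 52
```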

Sinisa

You are never offensive.

Hitting me over the head with an idea is a compliment. Thank you for pointing out the obvious to me.

Well, I fell for that one, too, when it was originally published... As a computer scientist, I should have been deeply sceptical of the original report, but alas, I was completely fooled... Good one, ChessBase...

Oh, and I should add: to my knowledge, the most sophisticated game solved by a computer so far is apparently checkers (which is an astonishing feat!): http://webdocs.cs.ualberta.ca/~jonathan/

As for chess, well... Even taking Moore's law into account, I don't believe I will see the game of chess being solved in my lifetime (I'm in my thirties). But, please: Prove me wrong :)

In the second picture, the board is not correct :)

Artificial Intelligence will remain principally impossible without self-reference!

German philosopher and mathematician Gotthard Günther developed the only feasible approach: polycontextural logic. It is officially said that Günther's research was stopped due to the Vietnam War and a lack of funds. Nothing could be farther from the truth. Rumors say that his research was classified as top secret after it had already yielded practical success (I remember reading about some architectural software that was able to solve a complex statics problem).

http://www.thinkartlab.com/pkl/archive/Cyberphilosophy.pdf

Chess and backgammon: the TD(λ) (temporal-difference) algorithm (e.g. used by Jellyfish) has surpassed the human world champions. In fact, experts changed some of their opening-move hierarchy according to the neural networks' new evaluations.

However, chess and Go lack the "noise" of dice, so TD(λ) seems unsuitable for them. Otherwise, I believe, the neural network could be used to prune the search tree in new ways to extend search depth considerably.
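The learning rule named in this comment is presumably TD(λ), temporal-difference learning with eligibility traces, the method Tesauro used for TD-Gammon; whether Jellyfish trained exactly this way is the commenter's claim, not something checked here. The sketch below is tabular rather than neural, and assumes a toy environment (the classic five-state random walk: start in the middle, reward 1 for exiting right, 0 for exiting left), so its correct state values are known to be 1/6 through 5/6. Function name and parameters are illustrative.

```python
import random

def td_lambda_random_walk(episodes=30_000, alpha=0.002, lam=0.8, seed=0):
    """Tabular TD(lambda) with accumulating eligibility traces on a toy random walk.

    Non-terminal states are 1..5 and every episode starts at 3. Stepping to 0
    ends the episode with reward 0; stepping to 6 ends it with reward 1.
    """
    rng = random.Random(seed)
    # v[0] and v[6] are terminal and stay 0; start the others at 0.5.
    v = [0.0] + [0.5] * 5 + [0.0]
    for _ in range(episodes):
        e = [0.0] * 7  # eligibility traces, reset at the start of each episode
        s = 3
        while s not in (0, 6):
            s_next = s + rng.choice((-1, 1))
            r = 1.0 if s_next == 6 else 0.0
            delta = r + v[s_next] - v[s]  # TD error, no discounting (gamma = 1)
            e[s] += 1.0                   # accumulating trace for the state just left
            for i in range(1, 6):
                v[i] += alpha * delta * e[i]
                e[i] *= lam               # decay every trace by gamma * lambda
            s = s_next
    return v[1:6]

estimates = td_lambda_random_walk()
print([round(x, 2) for x in estimates])
```

With a small constant step size the estimates should settle near 1/6, 2/6, ..., 5/6 while still jittering slightly; a backgammon program replaces the table `v` with a neural network and updates its weights along the same TD error.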

Of course, a NN can't explain its decisions; that's a big drawback. However, with Gotthard Günther's approach, a true expert could be built that knows the why. Polycontextural logic is infusing consciousness into matter! Think about it. As machines challenge the human domain of reasoning, humans will be forced to take the next step in evolution to distinguish themselves from machines, that is: discover their own true divinity and creator-likeness.

Philip