![]() Have you ever looked at a piano keyboard and wondered why the notes of an octave were divided up into seven white keys and five black ones? After all, the sounds that lie between one C and another form a continuous range of frequencies. And yet, throughout history and across different cultures, we have consistently divided them into these set of twelve semi-tones.

Have you ever looked at a piano keyboard and wondered why the notes of an octave were divided up into seven white keys and five black ones? After all, the sounds that lie between one C and another form a continuous range of frequencies. And yet, throughout history and across different cultures, we have consistently divided them into these set of twelve semi-tones.

Now, Deborah Ross and colleagues from DukeUniversity have found the answer. These musical intervals actually reflect the sounds of our own speech, and are hidden in the vowels we use. Musical scales just sound right because they match the frequency ratios that our brains are primed to detect.

Now, Deborah Ross and colleagues from DukeUniversity have found the answer. These musical intervals actually reflect the sounds of our own speech, and are hidden in the vowels we use. Musical scales just sound right because they match the frequency ratios that our brains are primed to detect.

When you talk, your larynx produces sound waves which resonate through your throats. The rest of your vocal tract -your lips, tongue, mouth and more - act as a living, flexible organ pipe, that shifts in shape to change the characteristics of these waves.

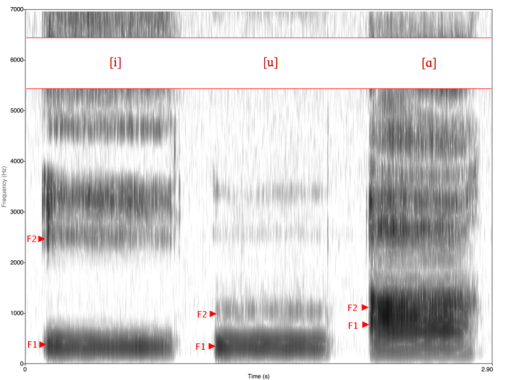

What eventually escapes from our mouths is a combination of sound waves travelling at different frequencies, some louder than others. The loudest frequencies are called formants, and different vowels have different 'formant signature'. Our brains use these to distinguish between different vowel sounds.

The first two formants, the ones with the lowest frequencies, are the most important. The brain pays particularly close attention to these and uses them to identify vowels. If they are artificially removed from a recording, the speaker becomes impossible to understand. On the other hand, getting rid of the higher formants does no such thing.

(This spectrogram shows the different frequencies that make up three different vowels. Frequency goes up the vertical axis. The darker the image, the louder that particular frequency is. For each vowel, the first two formants (the lowest dark bands) are marked.)

Despite the wide variety of sounds in different languages, and the even greater variety in people's voices, the formants of their vowels fall into narrow and defined ranges of frequencies. The first one always has a frequency of 200-1,000 Hz, while the second always lies between 800 and 3,000 Hz.

Ross analysed the formants of English vowels by asking 10 English speakers to read out thousands of different words and some longer monologues. Amazingly, she found that the ratio of the first two formants in English vowels tends to fall within one of the intervals of the chromatic scale.

When people say the 'o' sound in rod, the ratio between the first two formants corresponds to a major sixth - the interval between C and A. When they say the 'oo' sound in booed, the ratio matches a major third - the gap between C and E. Ross found that every two in three vowel sounds contain a hidden musical interval.

Her results didn't just apply to English either. Ross repeated her experiments with people who spoke Mandarin, a vastly different language where speakers use four different tones to change the meaning of each word.

Even so, Ross still found musical intervals within the formant ratios of Mandarin vowels. The distribution of the ratios was even similar - in both languages, an octave gap was most common, while minor sixth was fairly uncommon.

Ross believes that these hidden intervals could explain many musical curiosities. For example, the musical preferences of a certain culture could reflect the formants most commonly used in its language.

Ross believes that these hidden intervals could explain many musical curiosities. For example, the musical preferences of a certain culture could reflect the formants most commonly used in its language.

Hardly any music uses the full complement of 12 semitones, and European music usually limits itself to just 7 - the so-called 'diatonic scale' represented by a piano's white keys. Music from other parts of the world tends to use the 'pentatonic scale' where the octave is split into just 5 tones.

Ross found that the 70% of the chromatic intervals in her data were included in the diatonic scale, and 80% were found in the pentatonic one. She reckons that these scales are so widely used because they reflect the most common formant combinations in our speech.

She now wants to see if the link between formants and intervals can explain why music in a major key instinctively sounds happier and more upbeat than music in a minor key.

Formants are common to the vast majority of languages and cultures, which explains why the twelve-semitone chromatic scale is so universal. Regardless of our cultural differences, it is heartening to realise that in some ways, we are all the same.

Reference: Ross, Choi and Purves. 2007. Musical intervals in sounds. PNAS 104: 9852-9857.

"Hardly any music uses the full complement of 12 semitones" - what music does?

very interesting.

I do see where this effort succeeds. It is more likely that the sounds of our languages and the sounds of our music follow similar rules because in both cases they sound good to us.

Does the question need an answer? What sounds good to us tends to be combinations of frequencies in certain ratios--ratios where interference is avoided. That interfering sound combinations would sound bad seems a natural.

Technically, diatonic scales can start on any note, and therefore could incorporate any or all of the black keys as well.

But it's a very interesting result, because it links arguably the most abstract of the arts, music, to something concrete. Also, while most cultures use notes close to the Western chromatic scale, often the established tunings are slightly shifted, so that a tone might be a fraction sharp or flat from our perspective yet be perfectly in tune for that culture. It would be interesting to know if those kinds of variations were also found in the language.

culturally I don't know of any that do but many composers such as Arnold Schoenberg, Alban Berg, John Cage and Anton Webern composed 12 tone music.

On the West Coast the Kwakiutl natives created music that used quarter tones. I remember a lecture in an art history class back around 1970, by someone visiting from the UBC Anthropology department, who brought in a collection of Kwakiutl flutes and recordings of elders playing them. It was like listening to something completely alien as culturally we are not conditioned to "hear" those quarter tones as music.

That's really interesting and I'm looking forward to the results of her research on major and minor scales. This goes along with a linguist who taught language by focusing on the musicality of the particular language, ie the rise and fall of the voice in conversation. His students were much more comprehensible to native speakers than other 2nd language speakers.

Very interesting. I read a study once a long time ago about the use of major and minor keys, and the preference of either seemed to have a correlation with the listener's age... I wish I could remember where I read about that! :)

Technically, diatonic scales can start on any note, and therefore could incorporate any or all of the black keys as well.

This is because (at least in Western music) we use even-tempered tuning. Two notes which are N semitones apart have a frequency ratio of 2^(N/12). Prior to J. S. Bach's time (and this may still be true of some non-Western cultures), music usually used natural tuning, in which the frequency ratios were ratios of small integers: 2/1 for the octave, 3/2 for the perfect fifth, 4/3 for the perfect fourth, 5/4 and 6/5 for major and minor third respectively, etc.

For the so-called perfect intervals there is essentially no difference, because 3/2 happens to be an excellent approximation of 2^(7/12). But a trained ear can hear the difference between an even-tempered major third (ratio of 2^(1/3)) and a natural major third. Thus for natural tuning there is a preferred key; keys that are closely related to the preferred key will work acceptably, but you can't go too far away on the circle of fifths from the preferred key. In even-tempered tuning there is no preferred key, so you can start the diatonic scale on any semitone.

Question: what about the music of India, which uses vastly different scales (or at least I think it does) incorporating smaller intervals -- quarter tones?

As far as using all twelve tones, a variety of music at least attempts to. For example, "All the Things you Are" by Jerome Kern uses a chord for all twelve tones in the piece, in part by having the introduction set a half-tone below the verse. As noted above, there are the works of the serialists (Schoenberg, Berg, Webern and their followers like Boulez). However, some Chopin, Wagner and Liszt is highly chromatic; some of it may in fact include all twelve tones. In addition, Bach wrote a modulating cannon that goes through all twelve tones for "The Musical Offering," though I suppose that's cheating, since the tones aren't included at the same time--it's just a transposition.

Fascinating stuff... we have probably barely scratched the surface of the connection between language, music, and mathematics.

There are many rock songs which uses all the 12 tones. :)

Interesting article. The connection between voice frequencies and musical pitch could explain a couple of things from early Western music history. For example, this might explain why pitch notation settled fairly early on (~1000-1200CE) with a staff using the "diatonic scale", while rhythmic notation went through a variety of forms before notation standardized in the 16th and 17th centuries. Also, the concept of consonance and dissonance has changed over time. Some of the first experiments with harmony in Western music (that we know about) used octaves, fourths and fifths while thirds and sixths were initially considered more dissonant. (Our ears now accept quite complex harmonic structures as "consonant" that include a variety of diatonic and chromatic intervals.) Perhaps it was the language formants that drove those early choices.

However, I also have some questions. Echoing other comments, what about cultures that use scales other than an equal division of 12 tones? India was mentioned; another example is Arab music. (There are some references to ethnomusicology in the bibliography that might say more here.) Also, we're comparing modern languages with music that has undergone a continuous transformation for 1200 years. There is definitely a component that we like certain sounds because we hear them around us from birth. The fact that Western music hasn't strayed much outside those 12 chromatic notes certainly supports the author's thesis, but I'm curious how much of that would stem from social conditioning and how much from a voice-music connection. Unfortunately, native early medieval language speakers are difficult to obtain for comparison purposes... :-)

Following part of mikev6's comment:

IIRC, the introduction of thirds and sixths as consonant intervals (or at least as regular, non-transitional intervals) happened in the British Isles, around 1200-1400. Perhaps the Anglo dialect, or the peculiar type of French spoken by the Normans, could give us some clues. (I guess one could reconstruct the dialect?)

Same goes for older (9th century) mode classifications. (These are probably conceived for monophonic music, though.)

I guess I'm a little unimpressed by this "discovery". Human voice is harmonic, thus you would expect that the ratios among the partials would be harmonic ratios. The chromatic scale is just extending to less consonant ratios.

One other note: the second and third formant isn't really what determines the vowel; it's the amplitude of the partials within the (approximate) 500-1500 Hz range. So a male singer on a 220 hz A will have the formats at 220, 440, 660 (actually 1100/2 but there's a band at 660). A soprano two octaves up will have the first format at 880 and the second at 1760. Sopranos actually have to "cover" the vowel as they move up the scale in order to compensate for the base frequency being in the vowel definition region. It's also why sopranos are so gosh darn difficult to understand :-).

Gary Godfrey

Austin, TX

OK, I should know better than posting before tea. I badly mashed partials and formants in my comment. Now I'm wondering if there's more there...

Gary Godfrey

Austin, TX

Gary: When you have had tea, I'm curious if you can find more there.

I have often wondered this. Thanks for the explanation.

the lowest frequency formants are most important to understanding. i am going deaf, and due to explosions and infections have lost a lot of my higher frequency hearing. do you suppose that there is more than a co-incidental relationship between the the low frequency hearing going last and the low frequency formants being so critical to understanding speech?

This article is vastly under-researched. It isn't rocket science because its called psychoacoustics. Anyone who has made it through this article (especially the author) should seek the aid of the nearest musicologist. Musical intervals have been derived mathematically dating back around 500BC. There have obviously been many different tuning systems since then which divided the octave differently then we do now. Fast forwarding several hundred years, how we ended up with the current tuning solution, equal temperment, was not based on speech but on math. It was a rounding off of certain intervals to allow composers and performers to play equally in all keys on the same instrument. The affects of the major and minor mode have been studied for hundreds of years (as were the other modes) as well. There is an awful lot of music within our Western heritage, let alone the other half of the world, that is composed using all 12-tones. The major scale (but not minor - sorry Rameau) can be derived naturally from the overtone series which is probably why it exists within our voices, not the other way around. It would almost be a challenge to find a composition of worth that limited itself entirely to purely only using the 7 tones of the diatonic scale... even the most conservative composers still modulate to different keys or add some sort of chromatic flare.

For this idea to be valid, you'd have to study the speech patterns of people who have never heard music. Only then would the idea that the nature of speech predicts the music be valid. It could be just as valid the other way around... that our speech is influenced by the music we hear from birth.

I've always thought, without ever having studied the issue (a recipie for disaster), that the reason that music has an emotional aspect (minor sad, major happy, etc.) must be somehow related to the sound of people's voices when they are expressing those emotions. Somehow, a minor key taps into the same frequency patterns that characterise sad speech, or crying, or something.

Does anyone know of any research on that question?

This article seems to make the claim that "musical scales are based on human voice frequencies." However, I am skeptical: all of the natural or "perfect" harmonies are actually derived from the overtone series [ http://en.wikipedia.org/wiki/Harmonic_series_(music) ] which is just the set of natural resonant pitches of a plucked string or blown tube.

In fact, pretty much any long and thin vibrating object will produce a standard series of evenly spaced harmonics. From wind in the reeds to our own vocal cords, the harmonic series is just the sound of nature.

So are musical scales similar to the human voice because it these are the notes that sounded right to us, or are they similar because they both come from the basic physics of sound production?

Give me a break. Can someone explain how things can be otherwise? Is there a way for a tube or a string, or a throat for that matter, to resonate and produce harmonics that are not harmonic? This is physics not linguistics or musicology. Of course the harmonics of any vibrating system will have resemblances to intervals like thirds and fifths etc.

+1 for don's comment.

I'd be interested in more information on this research re just intonation vs equal temperament.

and I immediately thought of the inter-tonal subtleties of some african music.

I'm sure there's something in it,

but while I note the researcher mentions mandarin,

really this whole thinking seems euro-centric.

Maybe this fits to some extent with some of our earlier thoughts on the relation between human musicality and language: we can speak because we could sing.

http://users.ugent.be/~mvaneech/ORILA.FIN.html

Regards. Mario

While it's true that a vibrating tube can hardly avoid containing harmonics, maybe the research shows why we *prefer* such harmonics in our music. After all, there are few limits to the noises we can make (small children are especially good at this. Just give them a saucepan and a spoon) but we prefer simple harmonics.

(If the lower frequencies are vital for understanding how come we can understand a whisper, a high-frequency hiss?)

The reason we have 12 tones in the scale has been well-known for about 2500 years. It's by construction. You construct the scale by adding notes such that the ratio of their frequencies to previous frequencies can be expressed using small integers. And it isn't exactly 12 tones; it's different depending on which note you start with. We get our 12 tones by dividing an octave up into "equal" components that are good approximations of the correct tones for each of the 12 notes.

"culturally I don't know of any that do but many composers such as Arnold Schoenberg, Alban Berg, John Cage and Anton Webern composed 12 tone music."

IMHO, Berg, Cage, and Webern composed 12-tone noise, not music.

My cousin and I talked about this. He says that he notices how the shouts of a driver is usually higher pitched and it gets higher as the driver gets more upset. I notice how in most languages, your voice would go up when asking a question. Some universal thing I guess. But riddle me this: why does the Indians' scale cover more than 12 semi-tones?

Song came first in evolution. Speech came very late. The mechanism which was designed for singing and making jungle imitative sounds was later utilized for speech. It is not odd therefore for the formants of the vocal tract to show a musical scale within them. Mario Vaneechoutte and John R. Skoyles have an analysis on the net: "Song (musicality, singing capacity), we argue, underlies both the evolutionary origin of human language and its development during early childhood. Specifically, we propose that language acquisition depends upon a Music Acquiring Device (MAD) which has been doubled into a Language Acquiring Device (LAD) through memetic evolution. Thus, in opposition to the currently most prominent language origin hypotheses (Pinker, S. 1994. The Language Instinct, W. Morrow, N.Y.; Deacon, T.W. 1997. The Symbolic Species, W.W. Norton, N.Y.), we contend that language itself was not the underlying selective force which lead to better speaking individuals through natural selection. Instead we suggest that language emerged from the combination of (i) natural selection for increasingly better mental representation abilities during animal evolution (thinking, mental syntax) and (ii) natural selection during recent human evolution for the human ability to sing, and finally (iii) memetic selection that only recently (within the last 100,000 years) reused these priorly evolved abilities to create language. Thus, speech - the use of symbolic sounds linked grammatically - is suggested to be largely a cultural phenomenon, linked to the Upper Palaeolithic revolution. The ability to sing provided the physical apparatus and neural respirational control that is now used by speech. The ability to acquire song became the means by which children are able to link animal mental syntax with syntax of spoken language. Several studies strongly indicate that this is achieved by children through a melody-based recognition of intonation, pitch, and melody sequencing and phrasing. Language, we thus conjecture, owes its existence not to innate language learning competencies, but to innate music-associated ones, which - unlike the competencies hypothesized for language - can be straightforwardly explained to have evolved by natural selection.

"The question on the origin of language then becomes the question on the origin of song in modern humans or early Homo sapiens. At present our ability to sing is unexplained. We hypothesize that song capacity evolved as a means to establish and maintain pair- and group-bonding. Indeed, several convergent examples exist (tropical song birds, whales and porpoises, wolves, gibbons) where song was naturally selected with regard to its capacities for reinforcing social bonds. Anthropologists find song has this function also amongst all human societies.

"In conclusion, the ability to sing not only may explain how we came to speak, but may also be a partial answer to some of the very specific sexual and social characteristics so typical for our species and so essential in understanding our recent evolution."