This is probably the last trip into the cold atom toolbox, unless I think of something else while I'm writing it. But don't make the mistake of assuming it's an afterthought-- far from it. In some ways, today's topic is the most important, because it covers the ways that we study the atoms once we have them trapped and cooled.

What do you mean? They're atoms, not Higgs bosons or something. You just... stick in a thermometer, or weigh them, or something... OK, actually, I have no idea. They're atoms, yes, but at ultra-low temperatures and in very small numbers. You can't bring them into physical contact with anything at cryogenic temperatures, let alone room temperature, without destroying the ultra-cold sample completely. And there are so few atoms-- several billion if you're dealing with a pretty healthy MOT down to a few thousand for a small BEC-- that it would take years of operation to accumulate enough to produce a lump measurable on even a highly sensitive laboratory scale.
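To put a number on "so few atoms," here's a quick back-of-the-envelope calculation. It assumes the atoms are rubidium-87 (about 87 atomic mass units each), and the function name `sample_mass_kg` is just made up for illustration.

```python
ATOMIC_MASS_UNIT_KG = 1.66053906660e-27  # kg per atomic mass unit
RB87_MASS_U = 86.909                     # assumed species: rubidium-87, mass in u

def sample_mass_kg(n_atoms: float, mass_u: float = RB87_MASS_U) -> float:
    """Total mass of n_atoms atoms, each of mass mass_u atomic mass units."""
    return n_atoms * mass_u * ATOMIC_MASS_UNIT_KG

big_mot = sample_mass_kg(5e9)    # a healthy MOT: a few billion atoms
small_bec = sample_mass_kg(5e3)  # a small BEC: a few thousand atoms
print(f"MOT: {big_mot:.1e} kg, small BEC: {small_bec:.1e} kg")
```

A few billion atoms comes out below 10^-15 kg, roughly a million times less than a single microgram, and the small BEC is a million times lighter still.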

But if you can't touch them, how do you study them? Well, the same way we study the properties of atoms in any other context: we bounce light off them. They still absorb and emit very specific frequencies of light, and we can use that fact to detect the presence of trapped atoms, measure their temperature, and even take pictures of their distribution. Essentially everything we know about ultra-cold atoms comes from looking at the light that they absorb and re-emit.

So, basically, you turn the MOT lasers on, and take pictures like in that famous picture? That's the simplest and earliest technique people used to study laser-cooled samples, yes. And, in fact, most of the early measurements were done using fluorescence in one way or another, generally just by measuring the total amount of light scattered from the atoms. The total fluorescence is, to a first approximation at least, proportional to the number of atoms in your sample.
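Turning "proportional" into an actual atom count takes the per-atom scattering rate. Here's a minimal sketch, assuming an idealized two-level atom with the standard steady-state scattering rate, the same laser intensity and detuning everywhere in the cloud, and a separately calibrated collection efficiency for your detector. The function names and the rubidium-like numbers (linewidth about 2π × 6.07 MHz, wavelength 780 nm) are illustrative assumptions, not anything specific from the experiments described here.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 2.99792458e8        # speed of light, m/s

def scattering_rate(gamma, s, detuning):
    """Photons per second scattered by one two-level atom.

    gamma and detuning are angular frequencies (rad/s); s = I / I_sat.
    """
    return (gamma / 2) * s / (1 + s + (2 * detuning / gamma) ** 2)

def atom_number_from_fluorescence(detected_power_w, collection_efficiency,
                                  wavelength_m, rate_per_atom):
    """Atom count implied by detected fluorescence power.

    Assumes every atom scatters at the same rate and the detector sees a
    fixed fraction `collection_efficiency` of all scattered photons.
    """
    photon_energy = HBAR * 2 * math.pi * C / wavelength_m
    emitted_photons_per_s = detected_power_w / (photon_energy * collection_efficiency)
    return emitted_photons_per_s / rate_per_atom

# Example with assumed rubidium-like numbers: 1 uW detected, 1% collection
gamma = 2 * math.pi * 6.07e6
rate = scattering_rate(gamma, s=1.0, detuning=-2 * gamma)
n = atom_number_from_fluorescence(1e-6, 0.01, 780e-9, rate)
print(f"{rate:.3g} photons/s per atom -> about {n:.3g} atoms")
```

The "first approximation" caveat lives in those assumptions: in a real MOT the intensity, detuning, and polarization vary across the cloud, so the rate per atom is only known approximately.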

Could you be a little more specific? Well, the first temperature measurements were done by a "release and recapture" method: they cooled atoms in optical molasses, measured the amount of light scattered from the cloud, then turned off the lasers for a short time. While the light was off, the atoms would drift away from the trapping region, and when the lasers came back on, there was less total light, because only some of the atoms were recaptured. The number recaptured depends on how long the lasers were off and how fast the atoms were moving, so you can use that to measure the temperature.
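A toy Monte Carlo version of release-and-recapture shows the idea. It's a sketch under loud assumptions: all atoms start at a single point, the molasses recaptures anything inside a sphere of radius R (exactly the sharp-edged region that's hard to get in practice), the atoms are rubidium-87, and gravity is included. The function name and parameters are hypothetical.

```python
import numpy as np

KB = 1.380649e-23                          # Boltzmann constant, J/K
RB87_MASS_KG = 86.909 * 1.66053906660e-27  # assumed species: rubidium-87
G = 9.81                                   # gravitational acceleration, m/s^2

def recapture_fraction(temperature_k, off_time_s, capture_radius_m,
                       n_atoms=100_000, seed=0):
    """Fraction of atoms still inside the capture sphere when the lasers come back on."""
    rng = np.random.default_rng(seed)
    sigma_v = np.sqrt(KB * temperature_k / RB87_MASS_KG)  # rms speed per axis
    velocities = rng.normal(0.0, sigma_v, size=(n_atoms, 3))
    positions = velocities * off_time_s                   # free flight from the origin
    positions[:, 2] -= 0.5 * G * off_time_s ** 2          # gravity pulls along -z
    distance = np.linalg.norm(positions, axis=1)
    return float(np.mean(distance < capture_radius_m))

# Recaptured fraction vs. dark time for two trial temperatures, R = 5 mm
for t_ms in (5, 10, 20, 40):
    hot = recapture_fraction(1e-3, t_ms * 1e-3, 5e-3)
    cold = recapture_fraction(50e-6, t_ms * 1e-3, 5e-3)
    print(f"{t_ms:3d} ms dark: 1 mK -> {hot:.2f}, 50 uK -> {cold:.2f}")
```

To extract a temperature, you'd adjust T until the simulated curve matches the measured recapture fractions. Any error in R feeds straight into T, which is the big-uncertainty problem in a nutshell.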

That makes sense. So this is how you know the atoms are really cold? It's the first way people did the measurements. It has a bunch of problems, though, chiefly that you need to know the size of the laser beams very well, and the trapping region has to have a sharp edge. That's hard to arrange, so those measurements had a big uncertainty.

When the first sub-Doppler temperatures were measured, the NIST group didn't believe that they could possibly be getting atoms as cold as their initial results suggested, so they invented a bunch of other ways to measure the temperature. The best of these was the time-of-flight method, which became the new standard for a while.

How does that work? Well, you turn off the lasers that do the trapping and cooling, and turn on a single beam, positioned a bit below the trap. The released atoms will fall down through this beam, and when they do, they will absorb and re-emit light. If you measure the total amount of fluorescence from this laser as a function of time, you can use that to get the average speed of the atoms in the sample.

You can? Yep. See, the atoms in the trap are all moving in random directions, so some of them will be headed down, and some headed up. The ones headed down will get to the beam a little early, while the ones headed up will straggle in later. This gives you a fluorescence signal with some "width" in time that's set by the spread in vertical velocities, and that spread is determined by the temperature.
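Here's a small simulation of that arrival-time spread. It's a sketch assuming rubidium-87, a cloud that starts at a single point, and a probe beam treated as an infinitely thin sheet a distance `drop_m` below the trap, so it deliberately ignores the beam-width and cloud-size effects that complicate real measurements. The function names are hypothetical. For atoms much slower than the free-fall speed at the beam, the rms spread in arrival time is approximately σ_v / g, which the second function inverts.

```python
import numpy as np

KB = 1.380649e-23                          # Boltzmann constant, J/K
RB87_MASS_KG = 86.909 * 1.66053906660e-27  # assumed species: rubidium-87
G = 9.81                                   # gravitational acceleration, m/s^2

def arrival_times(temperature_k, drop_m, n_atoms=100_000, seed=0):
    """Time for each atom to fall drop_m down to the probe beam."""
    rng = np.random.default_rng(seed)
    sigma_v = np.sqrt(KB * temperature_k / RB87_MASS_KG)
    v_down = rng.normal(0.0, sigma_v, size=n_atoms)  # vertical velocity, + = downward
    # Positive root of drop = v_down * t + G * t**2 / 2
    return (-v_down + np.sqrt(v_down ** 2 + 2 * G * drop_m)) / G

def temperature_from_spread(times_s):
    """Invert sigma_t ~= sigma_v / G (valid when sigma_v << sqrt(2 * G * drop))."""
    sigma_v = G * np.std(times_s)
    return RB87_MASS_KG * sigma_v ** 2 / KB

times = arrival_times(10e-6, 0.05)  # 10 uK cloud, beam 5 cm below the trap
print(f"mean arrival {times.mean() * 1e3:.1f} ms, "
      f"rms spread {times.std() * 1e3:.2f} ms, "
      f"inferred T = {temperature_from_spread(times) * 1e6:.1f} uK")
```

Atoms headed up (negative `v_down`) get a larger root, so they arrive late, just as described above.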

You said it was the standard for a while, implying that it's not, now. Why is that? Well, the time-of-flight method is way better than release-and-recapture, but it still has problems. The arrival-time signal also depends a bit on the width of the beam, so you need to know the size and shape of the laser pretty well. The signal you get from any of these fluorescence-based techniques also depends on the intensity of the laser, and on any scattered light from elsewhere in the chamber-- so a little bit of flickering in the beam or a scratch on a window can throw things off. In the mid-90s, most diagnostics shifted instead to an imaging-based method that involves taking pictures of the atoms.

So you take pictures of the light scattered by the cloud? Actually, the reverse-- you take pictures of a laser, and look at the light that's missing because the atoms absorbed it.

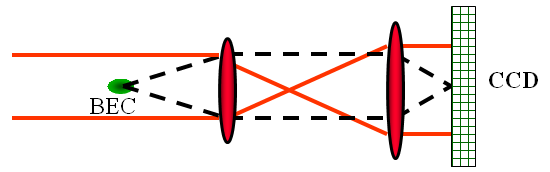

Excuse me? You look at light that isn't there? Right. The scheme looks like this:

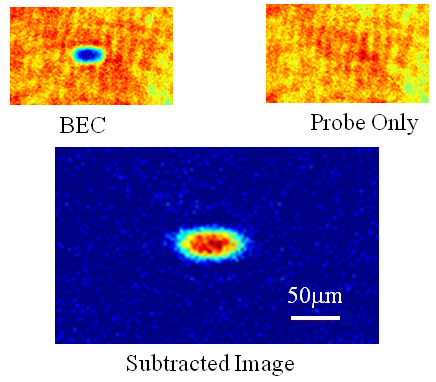

You take a single collimated beam of light at the frequency the atoms want to absorb, and shine it straight through the cloud of atoms onto a camera (with some lenses in there to do any magnification that you might want). The atoms will absorb light from the beam, and scatter it out randomly in other directions, so they cast a sort of "shadow" in the beam. The depth of the "shadow" depends on the total number of atoms along a line running through that part of the cloud, so you can get a density profile of the atom cloud. The data you collect look like this:

(which is also the "featured image" up above). You take two pictures: one image of the laser beam by itself, and one of the laser beam with the atoms present. Then you take a difference between those two images (subtracting pixel values in the software program of your choice), and that gives you an image of the density profile of the cloud of atoms.
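A simple subtraction is the right intuition. A common, slightly more careful version takes the logarithm of the ratio of the two images (the Beer-Lambert law), which gives an "optical depth" at each pixel proportional to the number of atoms per unit area along that line of sight. For a thin cloud that absorbs only a little light, this reduces to the difference divided by the probe intensity. The sketch below assumes a resonant probe well below saturation and the ideal two-level cross-section 3λ²/2π; real atoms with imperfect polarization usually have a smaller effective cross-section. The optional "dark" frame (a third picture with the probe off) and all function names are illustrative assumptions.

```python
import numpy as np

def optical_depth(with_atoms, probe_only, dark=None, floor=1e-6):
    """Per-pixel optical depth OD = -ln(I_atoms / I_probe)."""
    atoms = np.asarray(with_atoms, dtype=float)
    probe = np.asarray(probe_only, dtype=float)
    if dark is not None:  # optional background frame, probe off
        dark = np.asarray(dark, dtype=float)
        atoms = atoms - dark
        probe = probe - dark
    # Clip so dark or saturated pixels can't produce log(0) or division by zero
    transmission = np.clip(atoms, floor, None) / np.clip(probe, floor, None)
    return -np.log(transmission)

def atom_number_from_od(od, pixel_area_m2, wavelength_m):
    """Total atom number, assuming the resonant two-level cross-section.

    pixel_area_m2 is the pixel area referred back to the atoms
    (camera pixel area divided by magnification squared).
    """
    cross_section = 3 * wavelength_m ** 2 / (2 * np.pi)
    column_density = od / cross_section  # atoms per m^2 at each pixel
    return float(column_density.sum() * pixel_area_m2)

# Synthetic example: a Gaussian cloud casting a shadow in a flat probe beam
y, x = np.mgrid[-50:50, -50:50]
lam = 780e-9
sigma0 = 3 * lam ** 2 / (2 * np.pi)
n_col = 1e13 * np.exp(-(x ** 2 + y ** 2) / (2 * 10.0 ** 2))  # atoms per m^2
probe = np.full(n_col.shape, 1000.0)
shadow = probe * np.exp(-sigma0 * n_col)
print(atom_number_from_od(optical_depth(shadow, probe), (5e-6) ** 2, lam))
```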

Hey, that's pretty nice. Why didn't they do this from the beginning? Well, I suspect technology has a lot to do with that-- the method needs a decent CCD camera, which didn't start to get cheap until around the time I entered grad school. The method dates from pretty much my start at NIST, actually-- the optical control of collisions paper that was my first article in grad school used to get cited a bunch for explaining it. I'm not sure we were the first to do it, but we were one of the first in print.

So, these pictures are useful, then? Oh, absolutely. There's a ton of information in these-- you get the complete density profile, so you can extract an accurate measurement of the size of the cloud, and thus the peak density in absolute terms. You can measure the temperature by taking several of these images at different times after turning off the trap lasers and watching the cloud expand-- how fast it grows tells you the spread of atomic velocities, and thus the temperature. You can even detect some cool effects by the way they change the density profile.
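For a thermal cloud expanding ballistically, the rms width grows as σ(t)² = σ₀² + (k_B T/m) t², so plotting width squared against time squared gives a straight line whose slope is k_B T/m. Here's a minimal sketch of that fit, assuming rubidium-87, Gaussian profiles, and no interactions (a condensate expands differently, so this is for the thermal part). The function name is hypothetical, and the "data" are synthetic.

```python
import numpy as np

KB = 1.380649e-23                          # Boltzmann constant, J/K
RB87_MASS_KG = 86.909 * 1.66053906660e-27  # assumed species: rubidium-87

def temperature_from_expansion(times_s, widths_m, mass_kg=RB87_MASS_KG):
    """Fit sigma(t)^2 = sigma0^2 + (kB T / m) t^2 to rms cloud widths.

    Returns (temperature in K, initial rms width in m).
    """
    t2 = np.asarray(times_s, dtype=float) ** 2
    w2 = np.asarray(widths_m, dtype=float) ** 2
    slope, intercept = np.polyfit(t2, w2, 1)  # straight line in t^2
    temperature = mass_kg * slope / KB
    sigma0 = np.sqrt(max(intercept, 0.0))     # noise can push the intercept below zero
    return temperature, sigma0

# Synthetic check: widths for a 20 uK cloud that starts 100 um wide
t = np.array([2, 5, 8, 11, 14, 17, 20]) * 1e-3
true_w = np.sqrt((100e-6) ** 2 + KB * 20e-6 / RB87_MASS_KG * t ** 2)
T_fit, s0_fit = temperature_from_expansion(t, true_w)
print(f"T = {T_fit * 1e6:.1f} uK, sigma0 = {s0_fit * 1e6:.0f} um")
```

Fitting in t² rather than t keeps it a linear least-squares problem, and the intercept gives the initial cloud size as a bonus.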

Such as what? Well, Bose-Einstein condensation. The famous three-peak image that headed the evaporative cooling post shows the first detection of BEC, which was detected by the density profile. Here, I'll put it in again:

The signature image of a cloud of rubidium atoms crossing the BEC transition, from the Nobel Prize site.

This is a set of three density profiles turned into three-dimensional pictures for gosh-wow value. The picture on the left shows a cloud at a temperature above the BEC transition, and is basically a smooth-ish lump of atoms in a mostly spherical cloud. The middle picture, though, has a big blue-white spike sticking up in the center-- that's the BEC. The condensate is at a much, much lower temperature (in terms of the thermal velocity of its atoms) than the original cloud, and shows up as a very dense lump in the center. It's also lopsided, because the TOP trap they used is not spherically symmetric, and the shape of the BEC spike corresponds nicely to the shape of their trap, as it should.

And the picture on the right? That's a cloud well below the transition temperature. Where the middle picture, just barely below the transition, has a two-part profile-- the smooth thermal cloud and the sharp BEC spike-- the third picture is pretty much just BEC. That shows you can make nearly pure condensates by evaporative cooling in the magnetic trap.

Very nice. One question, though: Why did they color empty space red, and the condensate blue-white? I have no idea. They're about the only people ever to use that color map. Everybody else uses blue/black for empty space, and orange-yellow for the condensate.

And this is the standard technique for measuring BEC? It gets the basic idea, yes. There are some refinements to it-- the absorption technique is destructive, in that the condensate atoms have to absorb light to be detected, and when they do, they get a momentum kick that blasts the condensate apart. So if you want to measure time-dependent behavior this way, you need to piece it together from lots of individual images of different condensates.

You can improve on this somewhat with a couple of other techniques that basically just reduce or eliminate the photon scattering by the BEC-- there's a "phase contrast" technique, for example, that relies on using off-resonant light (so the condensate atoms don't absorb) and treating the condensate like a medium with some index of refraction that phase-shifts the light passing through it. With a bit of clever optical design, you can turn that into a "non-destructive" image of the condensate, which the Ketterle group pioneered and used to do the first in-situ studies of condensates in traps.

The basic idea of all of this is the same, though: you shine a laser through the condensate onto a camera, and use its effect on the light to take a picture of the density profile of the cloud.

And this is the only tool you have for measuring things? Pretty much. But it's a very powerful tool-- most of the things you'd like to see cause changes in the density profile. This slightly outdated image collection from MIT, for example, has some nice pictures of vortices, where the rotational motion of a condensate flowing in a trap shows up as "holes" in the density distribution. You can also turn position measurements into momentum measurements by giving the cloud a really long time to expand-- at that point, the position of an atom is really a measurement of its initial momentum, which determines what direction it moves and how quickly.
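Why does the expansion need to be "really long"? Reading velocity off an image as position divided by time also picks up each atom's starting position, so the inferred rms velocity is roughly √(σ_v² + (σ₀/t)²), and only approaches the true spread once σ₀/t is small. Momentum is then just mass times that velocity. The sketch below is a toy model that assumes purely ballistic flight, with no interactions (a real condensate's mean-field energy turns into kinetic energy as it expands) and gravity removed by measuring relative to the cloud center. The function name and numbers are illustrative.

```python
import numpy as np

def inferred_velocity_width(sigma_x0_m, sigma_v_m_s, tof_s, n_atoms=200_000, seed=1):
    """rms velocity you'd infer by reading position / time off an image.

    Each atom starts at a random position (rms sigma_x0_m) with a random
    velocity (rms sigma_v_m_s) and flies ballistically for tof_s.
    """
    rng = np.random.default_rng(seed)
    x0 = rng.normal(0.0, sigma_x0_m, n_atoms)
    v = rng.normal(0.0, sigma_v_m_s, n_atoms)
    x = x0 + v * tof_s               # position on the image after expansion
    return float(np.std(x / tof_s))  # naive "velocity = distance / time"

true_sigma_v = 3e-3  # m/s, illustrative
for tof in (0.01, 0.05, 0.2, 0.5):
    est = inferred_velocity_width(100e-6, true_sigma_v, tof)
    print(f"t = {tof * 1e3:4.0f} ms: inferred {est * 1e3:.2f} mm/s "
          f"vs true {true_sigma_v * 1e3:.2f} mm/s")
```

With a 100 µm starting cloud, a 10 ms expansion badly overestimates the velocity spread, while half a second gets within a fraction of a percent.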

The imaging technique can also be state selective, because different atomic states will absorb slightly different frequencies. So you can get pictures that show each of two different states separately, or that tag the state in some way. That's essential if you want to do quantum computing sorts of experiments.

There are still experiments using fluorescence imaging, too-- most of the direct-imaging studies of atoms in optical lattices use fluorescence to detect the individual atoms. And if all you care about is a single number, fluorescence detection is much simpler and faster, so atomic clocks tend to use that.

But it's all light scattering, all the time? For certain types of experiments, there are other kinds of detection that work-- some kinds of collisions produce charged particles, for example, and those can be detected with high efficiency. The metastable atoms I studied in grad school have enough energy to knock electrons loose from a surface, so we used to detect them by dropping them on a micro-channel plate. But those are really special cases. The vast majority of what we know about the physics of cold atoms comes from shining light on them and looking at either what comes out, or what doesn't come out.

But then, this isn't too surprising, as the vast majority of everything that we know about atoms comes from bouncing light off them. Cold-atom physics just has a couple of specialized methods for doing this, that happen to work well because the atoms really aren't moving very much at all.

So, that's it for this series, then? Pretty much, I think. There are some other tricks that turn up occasionally in the field, but I think we've covered all of the really general stuff that's shared by nearly all cold-atom experiments. I hope this will end up being a useful resource-- if nothing else, it gives me a set of links that I can paste into future posts when some core technique is important but I don't want to take time to explain it.

You should also do a trading-card version, dude. Some of these are pretty long. Yeah, well, I like to talk. But we'll see about doing a quick-reference variant. In my copious free time.

But for now, that's it. I hope this has been helpful and/or interesting.

Thanks for doing this series, it's been really interesting to follow along. Keep up the blogging, you explain this stuff really well.

This series has been good, yes. I didn't know about the density profile imaging method -- is there a simple term for that method?