I continue to struggle to avoid saying anything more about the Hugo mess, so let's turn instead to something totally non-controversial: gender bias in academic hiring. Specifically, this new study in the Proceedings of the National Academy of Sciences titled "National hiring experiments reveal 2:1 faculty preference for women on STEM tenure track" with this calm, measured abstract that won't raise any hackles at all:

National randomized experiments and validation studies were conducted on 873 tenure-track faculty (439 male, 434 female) from biology, engineering, economics, and psychology at 371 universities/colleges from 50 US states and the District of Columbia. In the main experiment, 363 faculty members evaluated narrative summaries describing hypothetical female and male applicants for tenure-track assistant professorships who shared the same lifestyle (e.g., single without children, married with children). Applicants' profiles were systematically varied to disguise identically rated scholarship; profiles were counterbalanced by gender across faculty to enable between-faculty comparisons of hiring preferences for identically qualified women versus men. Results revealed a 2:1 preference for women by faculty of both genders across both math-intensive and non–math-intensive fields, with the single exception of male economists, who showed no gender preference. Results were replicated using weighted analyses to control for national sample characteristics. In follow-up experiments, 144 faculty evaluated competing applicants with differing lifestyles (e.g., divorced mother vs. married father), and 204 faculty compared same-gender candidates with children, but differing in whether they took 1-y-parental leaves in graduate school. Women preferred divorced mothers to married fathers; men preferred mothers who took leaves to mothers who did not. In two validation studies, 35 engineering faculty provided rankings using full curricula vitae instead of narratives, and 127 faculty rated one applicant rather than choosing from a mixed-gender group; the same preference for women was shown by faculty of both genders. These results suggest it is a propitious time for women launching careers in academic science. Messages to the contrary may discourage women from applying for STEM (science, technology, engineering, mathematics) tenure-track assistant professorships.

Especially not those last two sentences. Nope, not provocative in the least...

I actually knew this one was coming, having been alerted to it by a colleague when I last wrote about gender in academic hiring. So, what is the deal with this?

Well, as is often the case, this is a paper study-- they sent a bunch of faculty in four different fields (engineering and economics, where women are badly underrepresented, and biology and psychology, where they're not) "hiring committee reports" on sets of three imaginary candidates for a faculty position and asked them to rank the three candidates. Two of these were the experimental test candidates, the third a "foil" deliberately designed to be somewhat weaker than the other two; the "foil" was nevertheless chosen first in about 2% of the tests, which, contrary to the popular saying, lets you precisely account for tastes...

The test candidates were drawn from a set of 20 packets randomizing a bunch of features, but all designed to be equally strong. The most important random feature was the pronoun used for the candidate (some got male pronouns, others female; if I read this correctly, none got names), but they also varied "lifestyle" factors: marital status, number of children, past parental leaves (these were mentioned as spontaneously offered by the candidate in the "report" from the "search committee"). One other important feature was that they varied the specific adjectives in the letters of recommendation, describing some candidates with words most commonly associated with letters for men ("analytical, ambitious, independent, stands up under pressure, powerhouse") and others with words generally associated with letters for women ("imaginative, highly creative, likeable, kind, socially skilled"). They swapped pronouns on both sets of letters, so that some "women" got "male" adjectives, and some "men" got "female" ones.
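To make the pronoun-swapping idea concrete, here's a toy Python sketch of counterbalancing. It is not the authors' actual assignment procedure (they used 20 packets and varied much more than this); the two-profile setup and every name in it are my own hypothetical simplification. The point is just that if you alternate which profile gets "she" from one rater to the next, each adjective set ends up attached to each pronoun equally often.

```python
from collections import Counter

# Hypothetical, simplified setup: two equally strong test profiles, each tied
# to one adjective set in its letters of recommendation.
PROFILES = {
    "A": "male-typed adjectives",    # e.g. "analytical, ambitious, independent"
    "B": "female-typed adjectives",  # e.g. "imaginative, likeable, kind"
}


def assign_pronouns(rater_ids):
    """Alternate which profile is described as "she" from one rater to the next.

    Every rater sees one woman and one man. Across raters, each adjective set
    is paired with each pronoun equally often (given an even number of raters).
    """
    plan = {}
    for i, rater in enumerate(rater_ids):
        she = "A" if i % 2 == 0 else "B"
        he = "B" if she == "A" else "A"
        plan[rater] = {"she": PROFILES[she], "he": PROFILES[he]}
    return plan


plan = assign_pronouns([f"rater{n}" for n in range(4)])
# How often each adjective set was attached to the female pronoun:
tally = Counter(entry["she"] for entry in plan.values())
print(tally)
```

With four raters, two "women" get the male-typed adjectives and two get the female-typed ones, which is what lets you separate the effect of the pronoun from the effect of the adjectives.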

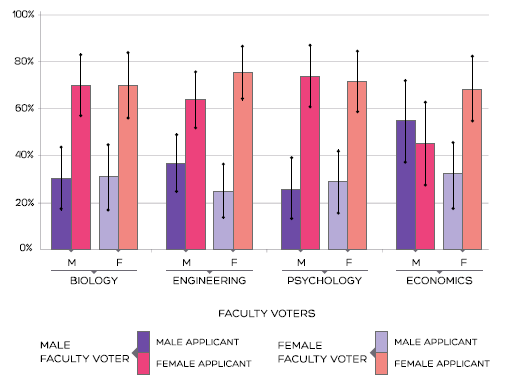

Each test got one fictitious woman and one fictitious man from the experimental set to rate; the "foil" was always a man. Taking out the tiny fraction of oddballs who picked the foil, they found that the candidates identified as women were preferred by a two-to-one margin, across the full range of conditions. The only sub-group of faculty who didn't show a strong preference for the woman in the test sample were male economists, who were slightly pro-male (55%-45%), in contrast to their female colleagues (68%-32% in favor of the woman).

Fig. 1 from the paper described in the text, showing the percentage of faculty rating each of the test candidates as their first choice for different fields and genders.
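The "two-to-one" figure is just a share of first-choice votes once the foil picks are dropped. A minimal Python sketch of that tally, using made-up numbers rather than the paper's data (the function name and the input format are my own assumptions):

```python
def first_choice_shares(choices):
    """Return each test candidate's share of first-choice votes, ignoring foil picks.

    `choices` holds one string per rater: "woman", "man", or "foil".
    """
    kept = [c for c in choices if c != "foil"]
    if not kept:
        raise ValueError("every rater picked the foil; no shares to compute")
    return {
        "woman": kept.count("woman") / len(kept),
        "man": kept.count("man") / len(kept),
    }


# Made-up tallies, NOT the paper's data: a 2:1 split plus two foil picks.
example = ["woman"] * 66 + ["man"] * 33 + ["foil"] * 2
shares = first_choice_shares(example)
print(f"woman {shares['woman']:.0%}, man {shares['man']:.0%}")
```

With these invented tallies it prints a 67%/33% split, which is what a two-to-one preference looks like in the percentages shown in the figure.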


In follow-on experiments they try to sort out a bunch of the individual factors involved-- presenting faculty with full fake CVs rather than just "search committee reports," varying just the family status of the candidates, and asking their test faculty to rate just a single candidate rather than doing a head-to-head comparison. There are a few small quirks-- this is, after all, social science-- but the general result is very consistent: when asked to evaluate this set of paper candidates, the faculty in their sample show a strong preference for hiring women.

This runs counter to a lot of conventional wisdom, and just as with the abstract, the concluding discussion features some language that borders on the combative regarding the current state of academic hiring, including the sweeping claim that:

Our experimental findings do not support omnipresent societal messages regarding the current inhospitability of the STEM professoriate for women at the point of applying for assistant professorships (4–12, 26–29). Efforts to combat formerly widespread sexism in hiring appear to have succeeded. After decades of overt and covert discrimination against women in academic hiring, our results indicate a surprisingly welcoming atmosphere today for female job candidates in STEM disciplines, by faculty of both genders, across natural and social sciences in both math-intensive and non–math-intensive fields, and across fields already well-represented by women (psychology, biology) and those still poorly represented (economics, engineering).

From a slightly more impartial perspective, though, there's one obvious way to reconcile these results with the picture of academic hiring as highly biased, which has to do with a difference in experimental design. The much-cited big paper-resume study showing bias in favor of male students was deliberately designed to use kind of marginal candidates in their fake resumes-- good-but-not-great grades, and so on. This study, on the other hand, uses fake candidates who are carefully designed to be equally strong-- that is, they present the faculty in their sample with a difficult choice between two excellent candidates.

The obvious way to reconcile these is to push the gender bias back to an earlier stage in the process. That is, to say that while this study may show a strong preference for hiring women who are rated as exceptionally qualified, bias in letter-writing, interviewing, etc. means that very few actual women are rated this highly in real hiring situations, so the direct head-to-head comparison they test here only rarely comes into play in the "real world."

They sort of attempt to address this with the adjective thing I mentioned earlier (though I don't see any detailed breakdown of those results in there). The probably more useful counter (or, I guess, counter-counter-argument) is in the data they give about actual hiring rates, namely that while women are less likely to apply for faculty jobs, they get hired into assistant professor positions at about the same rate that they get Ph.D.'s. That would allow you to reconcile this study's strong preference for highly qualified women with previous studies' tendency to under-rate women. The former basically undoes the latter, ending up with hiring that is mostly neutral in a statistical sense.

Which, I guess, is the next angle for a paper-resume study: put together packages that under-rate the imaginary female candidates, and see what happens then.

In terms of allocating grant funding, though, it might be better to put together a study of why male economics professors are such assholes relative to their colleagues in other fields...

The most obvious limitation of this study is that it isn't "live". The experimental test subjects can rate the candidates based on how they want to present themselves to the experimenters, not based on whom they actually want in their departments. If you have a bias against women (or whoever else) you might be disinclined to actually vote to hire one in your department, but you will probably nonetheless have no problem trying to persuade an experimenter that you are unbiased.

As to why anonymous test subjects might lie, well, first, I have been an anonymous subject in a social science study. Anonymity, non-disclosure forms, IRB protocols, etc. are weak sauce compared to the powerful compulsion not to reveal the darker aspects of yourself. You think I want to reveal all of my darkest aspects to some grad student I don't know? It's hard. Second, if you want to persuade the world that your field is not biased against women, being a test subject who gives the "right" answers is a good way to do that.

To be fair, I suspect that the researchers are fully aware of these limitations, and of the fact that they're why social science is friggin' hard. All they can report is what they find after doing what they can to minimize the effects of these limitations.

Not to mention that there may be peer and/or dean-level pressure to hire women for tenure-track positions. So when the experimenters have gone to such great lengths to make the packages comparable, it may just be socially easier to take the woman.

Another factor is that, while my experience is limited to physics faculty searches, the experimental protocol better reflects how departments come up with their shortlists than how they actually decide which candidate to hire. I could see how faculty might go to some lengths (especially due to peer or dean-level pressure) to ensure that at least one of the shortlisted candidates is female. The shortlisted candidates are then typically brought in for an interview, where any gender bias can be more easily hidden behind plausibly non-gender-biased reasons for preferring a candidate who happens to be male: perceived quality of the seminar/colloquium, cultural fit in the department, or even "I do not like thee, Dr. Fell/The reason why I cannot tell". The study may be more representative of hiring in fields where candidates are generally interviewed at major meetings (this is more typical of social science and humanities fields), where faculty who did not go to the meeting will not have had a chance to meet all of the candidates.

The problem with the "it's not live" argument is that the same reasoning should apply to the other studies that did show bias. The process is basically the same, after all. But it's not usually raised there, because that result is more in line with what people expect, and thus doesn't seem odd enough to need an explanation. They also get at this a bit by asking for ratings of single candidates, without the direct comparison that might produce an "oh, right, I'm supposed to choose the woman" reaction to skew things. And, again, there are the data on actual hiring, as discussed previously.

I definitely agree that this process is a bit more like the short-list selection than final hiring, and I'm a little surprised that wasn't mentioned in the paper (at least, not that I noticed).

The problem with the "it's not live" argument is harder than just comparing to other studies, because it depends on time- and context-dependent knowledge. How do we know whether the people in this study were more or less alert to this being a gender-bias test than those in the other studies? We don't. Sociology is a mess because we're dealing with study subjects who learn quickly, and now we're discussing higher-order effects in a series that probably doesn't converge. See, now people can go: oh, we're actually biased in favor of women. So women have it far too easy and we should hire more men. But wait, then we're back to the original problem, etc.

Needless to say, the only fixed point in this whole positive-feedback mess is equal opportunity, which is why I am strongly opposed to all support that goes exclusively to women, except in cases where there really is a biological reason to (read: doesn't make much sense to offer maternity leave or breast-feeding space to a man).

Yeah, there's the tricky question of how much the informed-consent paperwork skews things by letting people know what the question being studied is. I actually had a conversation about exactly this kind of thing with a colleague from Psychology just the other day. The only response I can offer is basically what he said, namely that every study these days has the same issue, so they just have to hope it all washes out, and results can be fairly compared.

I think there's a reasonable case to be made that there should be some preference for hiring women, at least until the current faculty distribution has a little more balance. But then that mostly applies to heavily male fields like physics; I'm not sure what to do about fields like biology and psychology. Happily, I'm neither a biologist nor a psychologist...

"Go ladies! Go ladies!" As a woman, I really do not mind being on the "upside" of this bias, but isn't this study missing a very important point? Affirmative action is currently forcing educational institutions to consider female applicants before male applicants; it may be bias, but it is the law.

Being a woman myself, I would normally be satisfied hearing news like this, but this is not how it should be. When hiring employees, you should be looking at who would be able to do the job better, whose qualifications are best suited to the position at hand, and who will contribute to your company's positive growth. The same answer emerges for race: it does not matter what race, color, or sex the candidates are; they should be hired based on what they bring to the table!

I strongly agree with the above comment. Companies should hire people according to who will bring good results to the company, not according to gender. Yes, women have been deprived of opportunities and limited in what they were allowed to do. Now things have changed, and we live in a free country where there is no sexism, and that should be practiced in all companies. 13175662

In the past, males were more favoured to get a job. There is no way to bridge that gap unless gender equality is followed. The past needs to stay exactly where it is so that we may see a way forward that is not negatively influenced by it.

There is nothing owed to females because of the great imbalance that existed years ago. Males do not have to feel guilty either, because what happened happened and nobody can turn back time.

The moment people are hired, it's because they are qualified and experienced for that position, regardless of gender. But the regulations in companies these days require a balance between the genders. And since we know that the population of females in the world is greater than that of males, this can only mean that females will become more dominant in everything.

All this just makes me so proud to be a woman. We have come a long way, and it is great to see that it is finally starting to pay off. The scales are tipping in our favour, and it is starting to sink in that we are very capable of doing things that males have traditionally dominated, possibly even better. 28276362

Women have been deprived of opportunities in the workplace in the past. As a woman myself, I am proud to finally see women getting the respect they so rightfully deserve. We have women in high positions in this era. Women have proven they are capable of achieving everything they put their minds to. Now I ask myself: can we expect to have a female president in the near future? Will the nation allow itself to be led by a female leader?

u14248043

I agree with some of the above comments. Democracy is a real thing and it should be in the favor of equality. The person with the best qualifications and best C.V. should get the job. Period.

This is definitely a case of trying to create a balance. In South Africa during Apartheid, non-white people were forbidden by law to go to university. As a result, black people could not become engineers, economists, doctors, and other academic professionals. Post-Apartheid, the South African government created laws to redress the inequalities created by Apartheid by requiring businesses to hire non-white people (blacks, Indians, coloureds) in preference to white people. This is done to achieve equal representation of designated groups in the workplace.

'Go ladies!' We as a society have come a long way from the previous bias towards men in the workplace. It feels great to know that the science community (and others) are on board with this. For once I don't mind being the topic of discussion as a woman. We as a society, after all, strive for gender equality, and what better way to help the female side of things? It's just a pity the study isn't live; it should definitely be repeated. I am all for it!

Personally, I can only be proud of something I got on merit. As long as no one is actively discouraging women from pursuing higher positions, there is absolutely no need for bias. 13237579

This comment is for an assignment at the University of Pretoria. I feel that this topic is similar to BEE (Black Economic Empowerment) in the workplace. Women were also previously disadvantaged; however, I still stand by equal opportunity regardless of the past. If you are the more capable candidate for the position, regardless of gender, race, and ethnicity, you should be the "chosen one". As a woman myself, I would prefer to receive a position based on my qualifications rather than my gender.

u15004814

I fully agree with the comment by Marcelle de Jong. For many decades, women have gotten the short end of the stick when it comes to job positions. I am a student at the University of Pretoria, and as a contrast to the bias described above, I can truly say that all the lecturers of my core subjects are highly educated males. But I believe that people should be appointed based on their qualifications and abilities, and therefore employers should be blind to factors such as race, sex, and culture group.

[This below comment is a response to Dr. Zuleyka Zevallos’s critique of the PNAS study on STEM faculty hiring bias by Wendy Williams and Stephen Ceci. http://othersociologist.com/2015/04/16/myth-about-women-in-science/]

Zuleyka, thank you for your engaging and well researched perspective. On Twitter, you mentioned that you were interested in my take on the study’s methods. So here are my thoughts.

I’ll respond to your methodological critiques point-by-point in the same order as you: (a) self-selection bias is a concern, (b) raters likely suspected study’s purpose, and (c) study did not simulate the real world. Have I missed anything? If so, let me know. Then I’ll also discuss the rigor of the peer review process.

As a forewarning to readers, the first half of this comment may come across as a boring methods discussion. However, the second half talks a little bit about the relevant players in this story and how the story has unfolded over time. Hence, the second half of this comment may interest a broader readership than the first half. But nevertheless, let’s dig into the methods.

(a) WAS SELF-SELECTION A CONCERN?

You note how emails were sent out to 2,090 professors in the first three of five experiments, of which 711 provided data yielding a response rate of 34%. You also note a control experiment involving psychology professors that aimed to assess self-selection bias.
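As a quick check on that figure (simple arithmetic on the two numbers quoted above, nothing more):

$$
\text{response rate} = \frac{711}{2090} \approx 0.340 \approx 34\%
$$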

You critique this control experiment because, “including psychology as a control is not a true reflection of gender bias in broader STEM fields.” Would that experiment have been better if it incorporated other STEM fields? Sure.

But there’s other data that also speak to this issue. Analyses reported in the Supporting Information found that respondents and nonrespondents were similar “in terms of their gender, rank, and discipline.” And that finding held true across all four sampled STEM fields, not just psychology.

The authors note this type of analysis “has often been the only validation check researchers have utilized in experimental email surveys.” And often such analyses aren’t even done in many studies. Hence, the control experiment with psychology was their attempt to improve prior methodological approaches and was only one part of their strategy for assessing self-selection bias.

(b) DID RATERS GUESS THE STUDY’S PURPOSE?

You noted that, for faculty raters, “it is very easy to see from their study design that the researchers were examining gender bias in hiring.” I agree this might be a potential concern.

But they did have data addressing that issue. As noted in the Supporting Information, “when a subset of 30 respondents was asked to guess the hypothesis of the study, none suspected it was related to applicant gender.” Many of those surveyed did think the study was about hiring biases for “analytic powerhouses” or “socially-skilled colleagues.” But not about gender biases, specifically. In fact, these descriptors were added to mask the true purpose of the study. And importantly, the gendered descriptors were counter-balanced.

The fifth experiment also addresses this concern by presenting raters with only one applicant. This methodological feature meant that raters couldn’t compare different applicants and then infer that the study was about gender bias. A female preference was still found even in this setup that more closely matched the earlier 2012 PNAS study.

(c) HOW WELL DID THE STUDY SIMULATE THE REAL WORLD?

You note scientists hire based on CVs, not short narratives. Do the results extend to evaluation of CVs?

There’s some evidence that they do. In Experiment 4, 35 engineering professors favored women by 3-to-1.

Could the evidence for CV evaluation be strengthened? Absolutely. With the right resources (time; money), any empirical evidence can be strengthened. That experiment with CVs could have sampled more faculty or other fields of study. But let’s also consider that this study had 5 experiments involving 873 participants, which took three years for data collection.

Now let’s contrast the resources invested in the widely reported 2012 PNAS study. That study had 1 experiment involving 127 participants, which took two months for data collection. In other words, this current PNAS study invested more resources than the earlier one by almost 7:1 for number of participants and over 18:1 for time collecting data. The current PNAS study also replicated its findings across five experiments, whereas the earlier study had no replication experiment.
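For readers who want to see where those ratios come from, here is the arithmetic on the figures quoted above, treating “three years” as roughly 36 months (my approximation; exact collection periods aren’t given here):

$$
\frac{873}{127} \approx 6.87 \approx 7{:}1 \ \text{(participants)}, \qquad
\frac{36\ \text{months}}{2\ \text{months}} \approx 18{:}1 \ \text{(data-collection time)}
$$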

My point is this: the available data show that the results for narrative summaries extend to CVs. Evidence for the CV results could be strengthened, but that involves substantial time and effort. Perhaps the results don’t extend to evaluation of CVs in, say, biology. But we have no particular reason to suspect that.

You raise a valuable point, though, that we should be cautious about generalizing from studies of hypothetical scenarios to real-world outcomes. So what do the real-world data show?

Scientists prefer *actual* female tenure-track applicants too. As I’ve noted elsewhere, “the proportion of women among tenure-track applicants increased substantially as jobseekers advanced through the process from applying to receiving job offers.”

https://theconversation.com/some-good-news-about-hiring-women-in-stem-d…

This real-world preference for female applicants may come as a surprise to some. You wouldn’t learn about these real-world data by reading the introduction or discussion sections of the 2012 PNAS study, for instance.

That paper’s introduction section does acknowledge a scholarly debate about gender bias. But it doesn’t discuss the data that surround the debate. The discussion section makes one very brief reference to correlational data, but is silent beyond that.

Feeling somewhat unsatisfied with the lack of discussion, I was eager to hear what those authors had to say about those real-world data in more depth. So I talked with that study’s lead author, Corinne Moss-Racusin, in person after her talk at a social psychology conference in 2013.

She acknowledged knowing about those real-world data, but quickly dismissed them as correlational. She had a fair point. Correlational data can be ambiguous. These ambiguous interpretations are discussed at length in the Supporting Information for the most recent PNAS paper.

Unfortunately, however, I’ve found that dismissing evidence simply because it’s “correlational” can stunt productive discussion. In one instance, an academic journal declined to even send a manuscript of mine out for peer review “due to the strictly correlational nature of the data.” No specific concerns were mentioned, other than the study being merely “correlational.”

Moss-Racusin’s most recent paper on gender bias pretends that a scholarly debate doesn’t even exist. Her most recent paper cites an earlier paper by Ceci and Williams, but only to say that “among other factors (Ceci & Williams, 2011), gender bias may play a role in constraining women’s STEM opportunities.”

dx.doi.org/10.1177/0361684314565777

Failing to acknowledge this debate prevents newcomers to this conversation from learning about the real-world, “correlational” data. All data points should be discussed, including both the earlier and new PNAS studies on gender bias. The real-world data, no doubt, have ambiguity attached to them. But they deserve discussion nevertheless.

WAS THE PEER REVIEW PROCESS RIGOROUS?

Peer review is a cornerstone of producing valid science. But was the peer review process rigorous in this case? I have some knowledge on that.

I’ve talked at some length with two of the seven anonymous peer reviewers for this study. Both of them are extremely well respected scholars in my field (psychology), but had very different takes on the study and its methods.

One reviewer embraced the study, while the other said to reject it. This is common in peer review. The reviewer recommending rejection echoed your concern that raters might guess the purpose of the study if they saw two men and one woman as applicants.

You know what Williams and Ceci did to address that concern? They did another study.

Enter data, stage Experiment 5.

That experiment more closely resembled the earlier 2012 PNAS paper and still found similar results by presenting only one applicant to each rater. These new data definitely did help assuage the critical reviewer’s concerns.

That reviewer still has a few other concerns. For instance, the reviewer noted the importance of “true” audit studies, like Shelley Correll’s excellent work on motherhood discrimination. However, a “true” audit study might be impossible for the tenure-track hiring context because of the small size of academia.

The PNAS study was notable for having seven reviewers because the norm is two. The earlier 2012 PNAS study had two reviewers. I’ve reviewed for PNAS myself (not on a gender bias study). The journal published that study with only myself and one other scholar as the peer reviewers. The journal’s website even notes that having two reviewers is common at PNAS.

http://www.pnas.org/site/authors/guidelines.xhtml

So having seven reviewers is extremely uncommon. My guess is that the journal’s editorial board knew that the results would be controversial and therefore made heroic efforts to protect the journal’s reputation. PNAS has come under fire from multiple scientists who repeatedly criticize the journal for letting studies simply “slip by” and get published because of an old boys’ network.

The editorial board probably knew that would be a concern for this current study, regardless of the study’s actual methodological strengths. This suspicion is further supported by some other facts about the study’s review process.

External statisticians evaluated the data analyses, for instance. This is not common. Quoting from the Supporting Information, “an independent statistician requested these raw data through a third party associated with the peer review process in order to replicate the results. His analyses did in fact replicate these findings using R rather than the SAS we used.”

Now I embrace methodological scrutiny in the peer review process. Frankly, I’m disappointed when I get peer reviews back and all I get is “methods were great.” I want people to critique my work! Critique helps improve it. But the scrutiny given to this study seems extreme, especially considering all the authors did to address the concerns such as collecting data for a fifth experiment.

I plan on independently analyzing the data myself, but I trust the integrity of the analyses based on the information that I’ve read so far.

SO WHAT’S MY OVERALL ASSESSMENT?

Bloggers have brought up valid methodological concerns about the new PNAS paper. I am impressed with the time and effort put into producing detailed posts such as yours. However, my overall assessment is that these methodological concerns are not persuasive in the grand scheme. But other scholars may disagree.

So that’s my take on the methods. I welcome your thoughts in response. I doubt this current study will end debate about sex bias in science. Nor should it. We still have a lot to learn about what contexts might undermine women.

But the current study’s diverse methods and robust results indicate that hiring STEM faculty is likely not one of those contexts.

Disclaimer: Ceci was the editor of a study I recently published in Frontiers in Psychology. I have been in email conversation with Williams and Ceci, but did not send them a draft of this comment before posting. I was not asked by them to write this comment.

dx.doi.org/10.3389/fpsyg.2015.00037

Women should be receiving the same benefits as men. At the end of the day, the qualifications are the same, meaning that both genders are capable of doing the same job, so I do not understand why men should receive more benefits for the same job. 15094139